From Bronx Science to Taskade Genesis: Connecting the Dots of AI

The Real Origin of AI

Most people think artificial intelligence was born in Silicon Valley or MIT’s ivory towers.

They’re wrong.

AI’s real origin story starts in a New York City public high school: Bronx High School of Science. Two kids walked those halls in the 1940s, two years apart:

Frank Rosenblatt (Class of ’46) invented the perceptron in 1957, one of the first artificial neural networks that could learn from examples. Marvin Minsky (Class of ’44) co-founded MIT’s AI Lab, championed symbolic AI, and nearly buried neural nets with a single book.

Their feud, neurons vs. symbols, connectionism vs. logic, defined AI for half a century.

That’s the Bronx Science legacy: a school where teenagers casually invented the future, disagreed violently about it, and left the rest of the world to fight over the ruins.

Decades later, I walked those same halls.

My Bronx Science Story

I didn’t care about AI history when I was there.

I cared about keeping servers alive.

I was commuting two hours a day from Forest Hills, spending late nights in the computer lab, and running a web hosting + video streaming business while my classmates were grinding SAT vocab lists. My stack looked like this:

- Physical servers I could barely afford, duct-taped together.

- VPS slices carved into existence with more hacks than best practices.

- FFmpeg pipelines crashing when someone uploaded a cursed AVI file.

- Customers who didn’t care if I was a high schooler.

Every outage was a fire. Every support ticket was a gut punch.

And that’s how I learned three lessons more valuable than any AP class:

- Systems break. Always. Build for failure.

- Nobody cares about your tech stack. They care if it works.

- Execution beats theory, every single time.

Those lessons burned into me before I could legally drive a car. I didn’t realize it then, but they were the same lessons AI itself was learning in the wilderness years.

The Perceptron’s Rise and Fall

Frank Rosenblatt’s idea was radical: a machine that could learn, not by following rules, but by adjusting weights, like a brain. The perceptron was simple:

- Inputs with weights

- Sum them

- Apply a threshold

- Adjust based on error
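
Those four steps fit in a few lines of code. What follows is a minimal sketch of the classic perceptron learning rule, not a reconstruction of Rosenblatt's hardware. The function names, the learning rate, the epoch cap, and the choice of logical AND as the training task are all assumptions made for illustration.

```python
def predict(weights, bias, inputs):
    """Weighted sum of the inputs, then a hard threshold at zero."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, learning_rate=1.0, max_epochs=100):
    """Perceptron learning rule: nudge the weights by the error on each sample.

    `samples` is a list of (inputs, target) pairs with targets 0 or 1.
    Returns (weights, bias, epochs_used).
    """
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for epoch in range(1, max_epochs + 1):
        mistakes = 0
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)  # -1, 0, or +1
            if error != 0:
                mistakes += 1
                # Move each weight toward the target, in proportion to its input.
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        if mistakes == 0:  # a full pass with no errors: nothing left to adjust
            return weights, bias, epoch
    return weights, bias, max_epochs


# Logical AND is linearly separable, so the rule is guaranteed to converge.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

if __name__ == "__main__":
    weights, bias, epochs = train_perceptron(AND_SAMPLES)
    for inputs, target in AND_SAMPLES:
        print(inputs, "->", predict(weights, bias, inputs), "expected", target)
```

No rules were written down anywhere. The weights start at zero and the errors do the teaching.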

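Rosenblatt first demonstrated the perceptron in 1958 as a simulation on an IBM 704. The custom hardware that followed, the Mark I Perceptron, was a room-sized machine with a 20×20 grid of 400 photocells for an eye and motor-driven potentiometers for weights. It could learn to recognize shapes.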

The New York Times write-up?

“The embryo of an electronic computer that [the Navy] expects will be able to walk, talk, see, write, reproduce itself and be conscious of its existence.”

It was hype. And hype is dangerous.

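Marvin Minsky, fellow Bronx Science alum and symbolic AI evangelist, struck back. His book Perceptrons (1969, co-authored with Seymour Papert) proved mathematically that a single-layer perceptron cannot compute XOR: no single straight line separates the inputs that should output 1 from the inputs that should output 0.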
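
The XOR limit is easy to see in practice. Here is a small sketch, with hypothetical function names and an arbitrary epoch count, that runs the same perceptron learning rule on OR and on XOR and counts the mistakes made in each pass over the data. It illustrates the result; it is not the proof from the book.

```python
def predict(weights, bias, inputs):
    """Weighted sum of the inputs, then a hard threshold at zero."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def mistakes_per_epoch(samples, epochs=1000, learning_rate=1.0):
    """Run the perceptron learning rule and record the mistakes in each pass."""
    weights, bias = [0.0, 0.0], 0.0
    history = []
    for _ in range(epochs):
        mistakes = 0
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        history.append(mistakes)
    return history


OR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
XOR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

if __name__ == "__main__":
    or_history = mistakes_per_epoch(OR_SAMPLES)
    xor_history = mistakes_per_epoch(XOR_SAMPLES)
    print("OR:  mistakes in the last epoch =", or_history[-1])
    print("XOR: fewest mistakes in any epoch =", min(xor_history))
```

OR settles down to zero mistakes. XOR never gets a clean pass, no matter how long it runs. The algebra says why: XOR needs b ≤ 0, w1 + b > 0, w2 + b > 0, and w1 + w2 + b ≤ 0. Add the two middle inequalities and you get w1 + w2 + b > -b ≥ 0, which contradicts the last one.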

He wasn’t wrong. But the effect was devastating. Funding vanished. Neural nets were dismissed as a dead end. The field entered its first AI Winter.

And Rosenblatt? He died in 1971 in a boating accident at 43. He never lived to see his vision resurrected. Minsky lived until 2016, long enough to watch deep learning eat the world.

He never fully admitted he was wrong.

Two Bronx Science kids. Same hallways. Polar opposites. Both right. Both wrong.

The Perceptron’s Revenge

The perceptron didn’t die. It waited.

In 1986, David Rumelhart, Geoffrey Hinton, and Ronald Williams published the paper that popularized the backpropagation algorithm. Suddenly, multi-layer perceptrons could be trained to solve XOR and much more.
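
Why does one extra layer matter? A rough sketch: the network below solves XOR with three threshold units and weights picked by hand for illustration. It does not run backpropagation. Backpropagation's contribution was finding weights like these automatically, by gradient descent, which in practice means swapping the hard step for a differentiable activation. The function names and the specific weight values are assumptions.

```python
def step(total):
    """Hard threshold: fire (1) when the weighted sum is positive."""
    return 1 if total > 0 else 0


def unit(weights, bias, inputs):
    """A single perceptron-style unit."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def xor_network(x1, x2):
    """Two hidden units plus one output unit, with hand-picked weights."""
    h_or = unit([1, 1], -0.5, [x1, x2])    # fires if at least one input is 1
    h_and = unit([1, 1], -1.5, [x1, x2])   # fires only if both inputs are 1
    # Output fires when OR is on and AND is off, which is exactly XOR.
    return unit([1, -1], -0.5, [h_or, h_and])


if __name__ == "__main__":
    for x1 in (0, 1):
        for x2 in (0, 1):
            print((x1, x2), "->", xor_network(x1, x2))
```

Each hidden unit draws its own straight line. The output unit combines the two, carving out the region a single line never could.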

Then came GPUs, big data, and algorithmic tricks like dropout and attention.

In 2012, AlexNet blew ImageNet wide open. Within a few years, neural nets were crushing benchmarks across computer vision, speech, and language.

By 2017, transformers changed everything. Self-attention replaced recurrence, scaling soared, and the stage was set for GPT.

ChatGPT launched in late 2022. By 2023, it had brought AI into the mainstream.

And here’s the irony: every transformer, every LLM, every “GenAI” breakthrough is still built on perceptrons. Billions of them stacked together, firing in parallel, adjusting weights.

Rosenblatt’s “toy” became the engine of modern civilization.

Minsky’s rules live on too, in attention, logic layers, and structured reasoning.

The Bronx Science feud resolved itself into synthesis.

The Execution Layer

Fast forward to today.

LLMs are powerful. But they’re trapped in demos. Clever chatbots, neat proofs of concept, “AI assistants” that collapse under real-world complexity.

The missing piece? Execution.

Rosenblatt wanted machines that act. Not just predict text. Not just generate code. Act.

That’s where Taskade Genesis comes in.

Genesis is the execution layer where humans and AI collaborate to actually get things done.

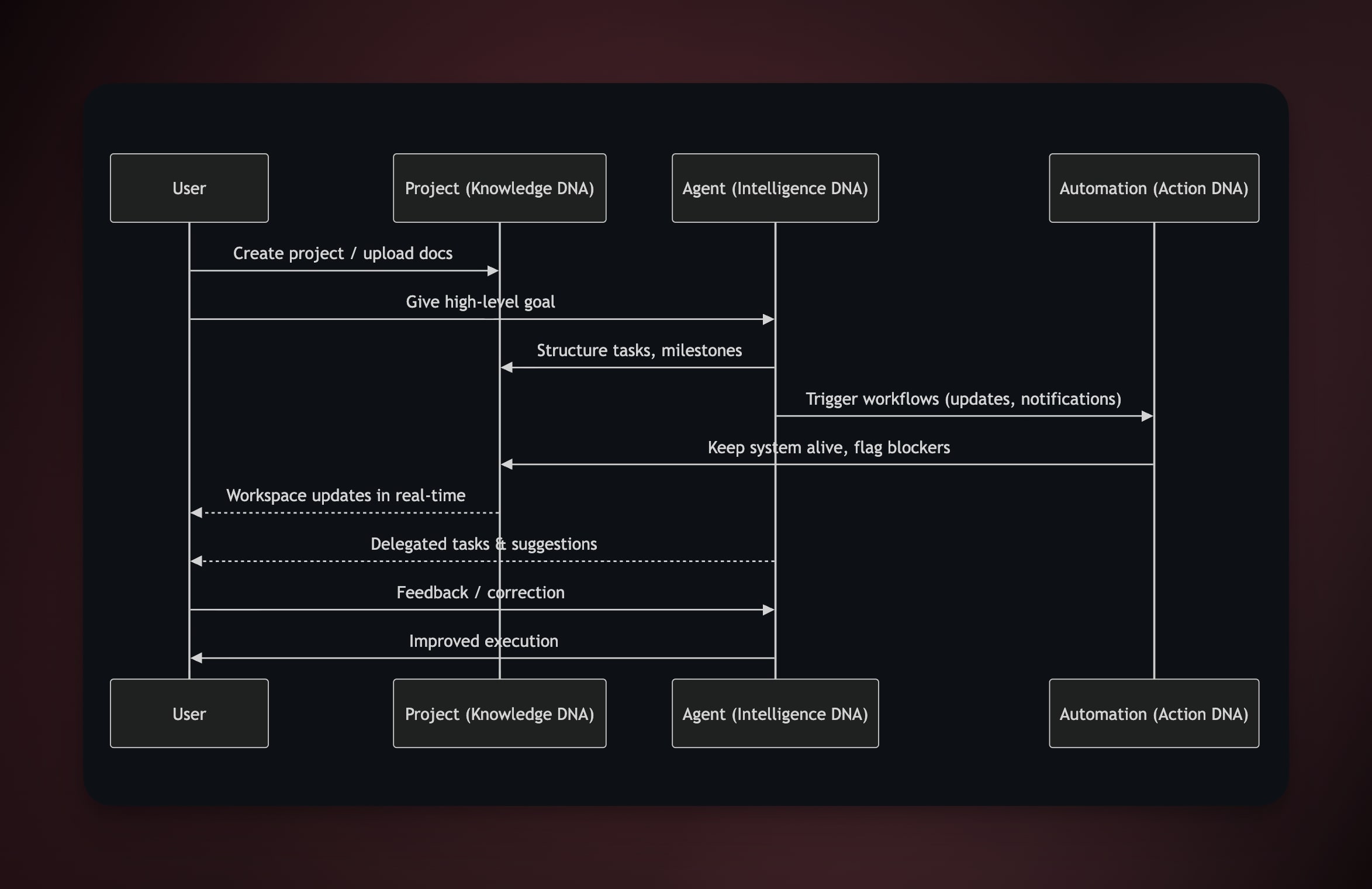

Projects → Knowledge DNA (documents, notes, and tasks = structured memory).

Agents → Intelligence DNA (AI teammates trained on your work, not just a prompt window).

Automations → Action DNA (always-on workflows that execute without babysitting).

One prompt → a living workspace.

Not a paragraph. Not a suggestion. A system that plans, delegates, and ships.

This is what Rosenblatt was dreaming of: not electronic brains locked in labs, but learning systems embedded in human workflows.

Connecting My Dots

When I look back, the threads are clear:

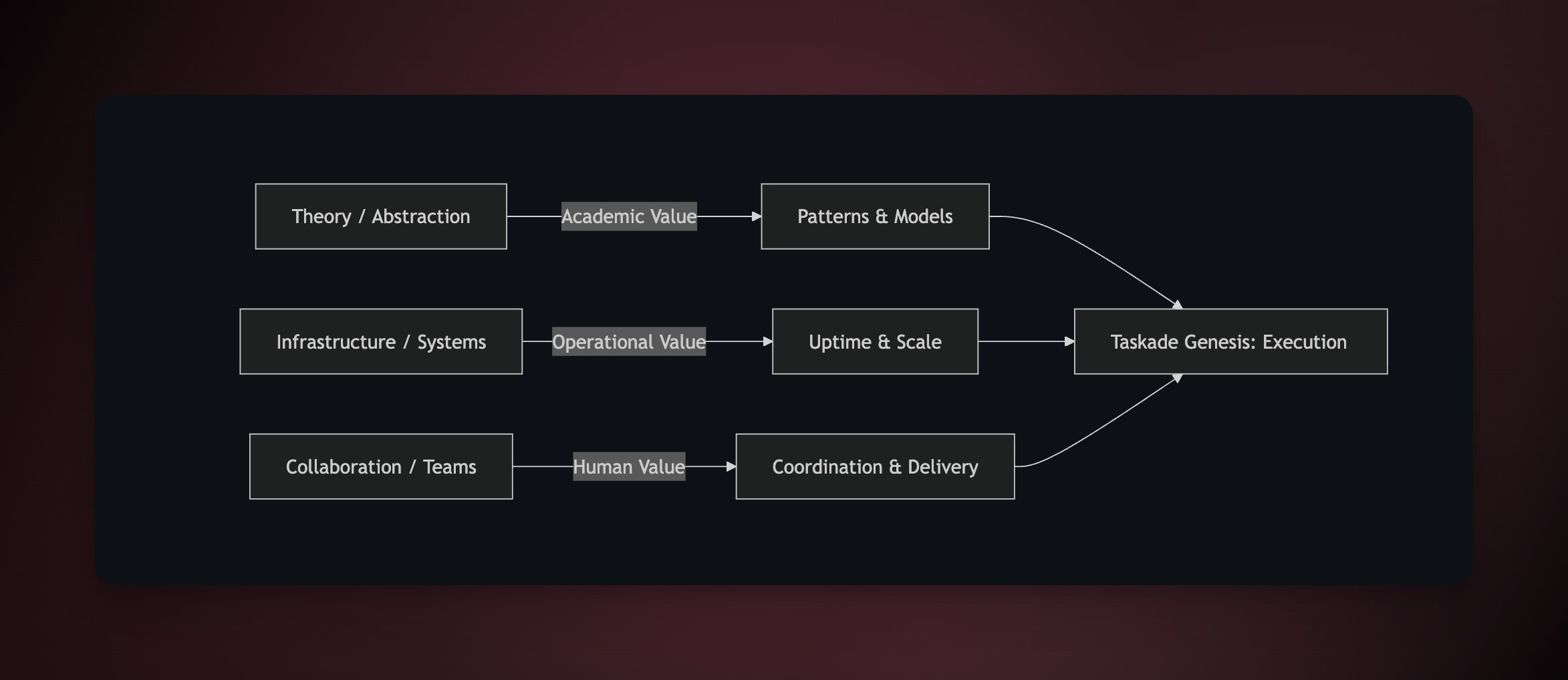

Bronx Science taught me abstraction. Find the pattern or drown in chaos.

The hosting hustle taught me infrastructure. Uptime is everything.

Taskade taught me collaboration. The bottleneck isn’t intelligence, it’s coordination.

Abstraction. Infrastructure. Collaboration.

That’s the DNA of Taskade Genesis.

Rosenblatt knew it.

Minsky fought it.

I lived it.

Why This Matters

People talk about the PayPal Mafia. The Traitorous Eight who founded Fairchild.

Nobody talks about the Bronx Science Mafia.

But it’s real:

Rosenblatt and Minsky — two teenagers from the Bronx who accidentally set the trajectory of AI. Generations of builders trained to survive by finding patterns in chaos.

And now, me — a kid from Forest Hills who kept servers alive at Bronx Science and ended up building the execution layer for AI.

Not because I was supposed to.

Because Bronx Science drills into you that systems can always be hacked.

Closing the Circle

The perceptron took 70 years to go from Rosenblatt’s lab to ChatGPT in your pocket.

The next leap, from AI as tool to AI as teammate, won’t take 70. It’ll take 7. Maybe less.

Taskade Genesis is that leap.

Not theory. Not hype. Execution.

Because that’s the Bronx Science way:

- Find the pattern.

- Build the system.

- Ship it before anyone realizes what happened.

Epilogue: Irony in the Details

In 2014, Google bought DeepMind, a pure neural network shop, for a reported $500 million.

In 2016, the year Minsky died, AlphaGo beat Lee Sedol at Go using deep neural networks.

Rosenblatt never saw his vindication.

Minsky lived long enough to watch the “dead end” consume the world.

And me? I’m building on their shoulders, in the same Bronx Science lineage, with Genesis — the execution layer where perceptrons finally fulfill their promise.

The perceptron didn’t die.

It was just waiting.

Now it’s awake.

John Xie is the founder and CEO of Taskade. He attended Bronx High School of Science, where he ran a video hosting business between classes and learned that execution beats theory every time. Taskade Genesis is his answer to 70 years of AI evolution: stop talking about intelligence, start shipping execution.
