What is Anthropic? Complete History: Claude Code, Opus, Sonnet, Constitutional AI & More (2026)

The complete history of Anthropic from founding to Claude AI, Constitutional AI, Opus 4.5, Claude Code, computer use, and the race to safe AGI. Updated January 2026 with latest developments.


Anthropic is an AI safety company that aims to build "reliable, interpretable, and steerable AI systems." Its Claude models and Constitutional AI framework have positioned the company as OpenAI's most formidable challenger, and with a recent $350 billion valuation the startup is now looking to redefine the AI landscape.

But where did it all start? What makes Anthropic different? How does Constitutional AI work? In today's article, we take a deep dive into the history of Anthropic and where it's heading. 🔮

🤖 What Is Anthropic?

Anthropic came to life in 2021 in San Francisco as a joint initiative of former OpenAI researchers, led by siblings Dario Amodei and Daniela Amodei. The mission was clear—develop safe, steerable AI systems that prioritize alignment with human values over raw capability.

"Our company's work is a long-term bet that AI safety problems are tractable, and that the theoretical and practical tools we're building today will become critical in a world with broadly capable AI systems."

Anthropic Safety Research Statement

The company has since built an impressive lineup: the Claude family of models (named after Claude Shannon, the father of information theory), the Constitutional AI training method, and Claude Code, an agentic coding tool that lives in your terminal.

But all those things were just the beginning.

In 2024-2025, Anthropic emerged as OpenAI's most serious competitor thanks to Claude 3 Opus, Claude 3.5 Sonnet, and breakthrough features like computer use. With backing from Amazon, Google, Microsoft, and Nvidia, and total funding of over $37 billion, the company is valued at a staggering $350 billion as of January 2026.

So, let's wind back the clock and see where it all started.

🥚 The History of Anthropic

The Early Days of AI Safety Research

Before Anthropic, there was a growing concern in the AI research community about alignment—the challenge of ensuring AI systems do what humans actually want them to do, not just what they're asked to do.

This distinction might sound trivial, but it's profound.

The AI alignment problem emerged from research in the 2000s and 2010s, with thinkers like Stuart Russell, Nick Bostrom, and Eliezer Yudkowsky highlighting the risks of advanced AI systems pursuing goals misaligned with human values.

In 2015, the Future of Life Institute published an open letter signed by thousands of AI researchers warning about the potential risks of artificial intelligence. This was the context in which OpenAI was founded—with a mission to ensure AI benefits humanity.

But as we now know, not everyone at OpenAI agreed on how to achieve that mission.

Dario Amodei, CEO and co-founder of Anthropic, has been a leading voice in AI safety research since his days at OpenAI.

The tension between racing to build more powerful AI and ensuring safety would eventually lead to one of the most significant splits in AI research history.

The OpenAI Exodus (2020-2021)

In 2020, a group of senior researchers at OpenAI grew increasingly concerned about the company's direction. Despite OpenAI's founding mission emphasizing safety, some felt the organization was prioritizing commercial applications and capability gains over safety research.

Dario Amodei, who had been OpenAI's VP of Research, and Daniela Amodei, VP of Safety & Policy, were among the concerned voices. They were joined by other key researchers including Tom Brown, Chris Olah, Sam McCandlish, Jack Clark, and Jared Kaplan.

Dario Amodei, CEO and co-founder of Anthropic, speaking at TechCrunch Disrupt 2023.

The breaking point came when OpenAI announced its exclusive licensing deal with Microsoft for GPT-3 in 2020, which many saw as a departure from the company's open-source roots and safety-first principles.

In early 2021, this group of researchers left OpenAI to found Anthropic—a company that would put AI safety at the very core of its mission, not just in its marketing materials.

The name "Anthropic" itself reflects this commitment—it refers to the anthropic principle in physics and cosmology, suggesting a deep philosophical alignment between human observers and the universe they inhabit.

Constitutional AI & Claude 1 (2021-2023)

After founding Anthropic in 2021, the team immediately got to work on a novel approach to AI alignment: Constitutional AI (CAI).

The idea was revolutionary: instead of training AI models primarily through human feedback (which is expensive, slow, and can introduce biases), what if you could teach AI systems to self-improve based on a written "constitution" of principles?

The Constitutional AI process: AI evaluates its own outputs against a set of principles, then learns to generate better responses. Source: Anthropic

Constitutional AI works in two phases:

- Supervised Learning Phase: The AI generates responses to prompts, then critiques and revises its own responses based on constitutional principles.

- Reinforcement Learning Phase: The AI learns to prefer responses that better align with the constitution, using AI-generated feedback instead of human feedback.
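The two phases above can be sketched as a toy loop. This is a minimal illustration under loud assumptions, not Anthropic's training code: `generate`, `critique`, and `revise` are hypothetical stand-ins for model calls, and the one-line "constitution" and banned-word check are invented purely for the example.

```python
from dataclasses import dataclass

# Hypothetical one-principle "constitution" used only for this toy example.
CONSTITUTION = ["Do not include insults."]
BANNED_WORDS = {"stupid", "idiot"}  # toy proxy for "violates the principle"


def generate(prompt: str) -> str:
    """Stand-in for a model call: returns a deliberately flawed draft."""
    return f"That is a stupid question, but here is an answer to: {prompt}"


def critique(response: str) -> list[str]:
    """Stand-in for self-critique: list the banned words found in the response."""
    words = {w.strip(".,:!?").lower() for w in response.split()}
    return sorted(words & BANNED_WORDS)


def revise(response: str, problems: list[str]) -> str:
    """Stand-in for self-revision: drop the words the critique flagged."""
    kept = [w for w in response.split() if w.strip(".,:!?").lower() not in problems]
    return " ".join(kept)


@dataclass
class PreferencePair:
    prompt: str
    chosen: str
    rejected: str


def supervised_phase(prompt: str) -> tuple[str, str]:
    """Phase 1: draft, critique against the constitution, then revise."""
    draft = generate(prompt)
    revised = revise(draft, critique(draft))
    return draft, revised


def ai_feedback_phase(prompt: str, a: str, b: str) -> PreferencePair:
    """Phase 2: an AI judge (here, the critique function) ranks two responses."""
    if len(critique(a)) <= len(critique(b)):
        return PreferencePair(prompt, chosen=a, rejected=b)
    return PreferencePair(prompt, chosen=b, rejected=a)


draft, revised = supervised_phase("How do tides work?")
pair = ai_feedback_phase("How do tides work?", draft, revised)
print(pair.chosen)
```

In a real pipeline the revised responses would serve as supervised fine-tuning data and the preference pairs would train a reward signal; here both are just returned so the flow is visible.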

This approach had several advantages:

- Scalability: You don't need thousands of human raters

- Transparency: The principles are written down and can be examined

- Consistency: The same principles apply across all training

- Adaptability: The constitution can be updated as values evolve

In March 2023, Anthropic released Claude 1, their first production language model built using Constitutional AI. While less powerful than GPT-4 (which had launched the same month), Claude 1 showed remarkable characteristics:

- More helpful and honest in ambiguous situations

- Better at admitting uncertainty

- More resistant to harmful prompts

- Clearer about its limitations

The response from developers and enterprises was immediate. Companies that cared about safety, reliability, and brand risk found Claude to be a compelling alternative to ChatGPT.

The Claude Family Explosion (2023-2024)

In July 2023, Anthropic released Claude 2, a significant upgrade that expanded the context window to 100,000 tokens (roughly 75,000 words)—a game-changing feature that dwarfed GPT-4's 8,000-32,000 token limit at the time.

This massive context window meant Claude could:

- Analyze entire codebases in a single prompt

- Read and reason about full-length books

- Maintain coherent conversations over hundreds of exchanges

- Process complex legal documents without summarization
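The "100,000 tokens, roughly 75,000 words" figure implies about 0.75 words per token. Here is a quick sketch of that arithmetic, assuming the article's ratio holds (real tokenizers vary with language and content, and `WORDS_PER_TOKEN`, `estimate_tokens`, and `fits_in_context` are illustrative names, not part of any Anthropic API):

```python
WORDS_PER_TOKEN = 0.75  # implied by the article: 75,000 words / 100,000 tokens


def estimate_tokens(word_count: int) -> int:
    """Rough token estimate from a word count using the article's ratio."""
    return round(word_count / WORDS_PER_TOKEN)


def fits_in_context(word_count: int, context_tokens: int = 100_000) -> bool:
    """True if the estimated token count fits in the given context window."""
    return estimate_tokens(word_count) <= context_tokens


print(estimate_tokens(75_000))          # 100000
print(fits_in_context(60_000))          # True: ~80,000 tokens fit in 100,000
print(fits_in_context(60_000, 32_000))  # False: ~80,000 tokens exceed 32,000
```

By this estimate, a 60,000-word manuscript fits in a 100,000-token window but not in a 32,000-token one.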

But the real breakthrough came in March 2024 with the Claude 3 family.

Timeline of Claude 3 Family:

| Date | Release | Key Features |
|---|---|---|
| Mar 2024 | Claude 3 Haiku | Fastest, most affordable model for simple tasks |
| Mar 2024 | Claude 3 Sonnet | Balanced intelligence and speed for enterprise workloads |
| Mar 2024 | Claude 3 Opus | Most intelligent model, outperformed GPT-4 on many benchmarks |

Claude 3 Opus was a watershed moment. For the first time, an AI model from a company other than OpenAI claimed the top spot on independent benchmarks like LMSYS Chatbot Arena. It beat GPT-4 on reasoning, math, coding, and multilingual tasks.

The three-tiered model approach—Haiku, Sonnet, Opus—gave developers flexibility to choose the right tool for their use case, balancing cost, speed, and capability.

Anthropic had officially entered the AI major leagues.

Claude 3.5, Computer Use & Agents (2024-2025)

June 2024 brought another surprise: Claude 3.5 Sonnet, a mid-tier model that outperformed the flagship Claude 3 Opus while being faster and cheaper.

This was unusual in the AI industry. Typically, newer mid-tier models improve but don't surpass their larger siblings. Claude 3.5 Sonnet broke that pattern, achieving:

- 64% of problems solved in Anthropic's internal agentic coding evaluation (vs 38% for Claude 3 Opus)

- Faster response times with lower latency

- Better visual reasoning capabilities

- Improved agentic capabilities

But the most groundbreaking announcement came in October 2024: computer use.

Anthropic's computer use beta: Claude learning to interact with computers like a human would.

Computer use is exactly what it sounds like—Claude can now:

- See and interpret computer screens

- Move the mouse cursor

- Click buttons and links

- Type text into forms

- Navigate applications

- Execute multi-step workflows across different programs

This wasn't just about automating tasks. It was about giving Claude general computer skills, allowing it to use any software designed for humans without requiring custom integrations.

Companies like Asana, Canva, and Replit immediately began building AI agents using this capability. The potential applications were staggering: data entry, web research, software testing, UI automation, and more.

Claude 4 & Opus 4.5 Era (2025-2026)

The pace accelerated in 2025. Here's what happened:

Timeline of 2025-2026 Releases:

| Date | Release | Key Features |
|---|---|---|
| May 2025 | Claude Sonnet 4 | Default model, faster and more context-aware |
| May 2025 | Claude Opus 4 | Level 3 safety classification, significantly more capable |
| Aug 2025 | Claude Opus 4.1 | Improved code generation, search reasoning, instruction adherence |
| Nov 2025 | Claude Opus 4.5 | Best coding model in the world, enhanced workplace tasks |
| Jan 2026 | Claude Cowork | GUI version for non-technical users |

Claude Opus 4.5, released in November 2025, represents the current pinnacle of Anthropic's capabilities. According to independent benchmarks, it reclaimed the coding crown from Google's Gemini 3, demonstrating:

- Superior performance on HumanEval and MBPP coding benchmarks

- Advanced reasoning on complex spreadsheet and data analysis tasks

- Better instruction following in ambiguous scenarios

- Enhanced resistance to prompt injection attacks

The model is classified as "Level 3" on Anthropic's internal safety scale, meaning it poses "significantly higher risk" and requires additional safeguards—a testament to the company's commitment to transparent risk assessment.

The Competitive Landscape:

| Company | Flagship Model | Strength |
|---|---|---|
| Anthropic | Claude Opus 4.5 | Safety, coding, agents |
| OpenAI | GPT frontier models, o3 | Reasoning, multimodal |
| Google | Gemini | Search integration, speed |
| Meta | Llama 3.3 | Open source |
| Mistral | Mixtral | European privacy |

Anthropic's valuation grew from $4.1 billion in May 2023 to an astounding $350 billion in January 2026—making it one of the most valuable private companies in history and positioning it as OpenAI's most formidable rival.

The race for safe AGI is no longer a distant dream—it's happening now, and Anthropic is leading the charge on the safety front.

🔎 Amazon, Google & Microsoft Partnerships

Anthropic's strategic partnerships have been critical to its rapid growth. Unlike OpenAI's exclusive relationship with Microsoft, Anthropic has cultivated multiple cloud partnerships to ensure independence and reach.

Amazon Partnership ($8 billion)

In September 2023, Amazon announced an investment of up to $4 billion in Anthropic, with an additional $4 billion committed in 2024-2025. Amazon described the partnership as follows:

"Amazon will become Anthropic's primary cloud provider for mission-critical workloads, including safety research and future foundation model development. Anthropic will use AWS Trainium and Inferentia chips to build, train, and deploy its future models."

Amazon Press Release

As part of "Project Rainier," Amazon built a vast network of data centers and custom AI chips specifically optimized for Anthropic's workloads. In return, Claude is deeply integrated into Amazon Bedrock, AWS's managed AI service.

Google Partnership ($3 billion)

Google invested $2 billion in October 2023, followed by another $1 billion in January 2025. The collaboration focuses on:

- Claude integration with Google Cloud's Vertex AI

- Access to Google's TPU (Tensor Processing Unit) infrastructure

- Cloud computing deals worth tens of billions of dollars over multiple years

Microsoft & Nvidia Partnership ($15 billion)

In November 2025, Microsoft and Nvidia jointly announced investments of up to $15 billion in Anthropic, with Anthropic committing to purchase $30 billion of computing capacity from Microsoft Azure running on Nvidia AI systems.

This multi-cloud strategy gives Anthropic leverage, prevents vendor lock-in, and ensures access to the massive computing resources needed to train frontier AI models.

🤯 The Valuation Surge

Anthropic's valuation trajectory has been nothing short of extraordinary:

- May 2023: $4.1 billion (Series C)

- March 2025: $61.5 billion (Series E, led by Lightspeed Venture Partners)

- September 2025: $183 billion

- January 2026: $350 billion (Series F, led by Coatue and GIC)

The jump to $350 billion in early 2026 represents a near-doubling in just four months—one of the fastest valuation increases in tech history.
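The "near-doubling in just four months" claim can be checked with a few lines of arithmetic. The sketch below uses only the valuation figures listed in this article; the day of the month is an assumption (set to the 1st) because the article gives months only, and `growth_steps` is an illustrative helper name.

```python
from datetime import date

# Valuation milestones as listed in this article (USD billions).
# Day-of-month is assumed; the article only gives month and year.
MILESTONES = [
    (date(2023, 5, 1), 4.1),
    (date(2025, 3, 1), 61.5),
    (date(2025, 9, 1), 183.0),
    (date(2026, 1, 1), 350.0),
]


def months_between(start: date, end: date) -> int:
    """Whole calendar months from start to end."""
    return (end.year - start.year) * 12 + (end.month - start.month)


def growth_steps(points):
    """Return (start, end, multiple, months) for each consecutive pair."""
    steps = []
    for (d0, v0), (d1, v1) in zip(points, points[1:]):
        steps.append((d0, d1, v1 / v0, months_between(d0, d1)))
    return steps


for d0, d1, multiple, months in growth_steps(MILESTONES):
    print(f"{d0:%Y-%m} -> {d1:%Y-%m}: {multiple:.2f}x over {months} months")
```

The last step works out to roughly 1.91x over four months, which is consistent with the "near-doubling" description.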

What's driving this investor frenzy?

- Market Position: Anthropic is seen as the primary alternative to OpenAI

- Enterprise Adoption: Major companies are choosing Claude for safety and reliability

- Technical Leadership: Claude consistently ranks among the top models on benchmarks

- Strategic Partnerships: Backing from Amazon, Google, Microsoft, and Nvidia

- Safety Moat: Constitutional AI provides differentiation in regulated industries

With total funding exceeding $37 billion over 16 rounds from 83 investors, Anthropic has the resources to compete at the frontier of AI research for years to come.

🤔 So, What Makes Anthropic Different?

Constitutional AI Approach

Anthropic's defining innovation is Constitutional AI—a fundamentally different approach to alignment than competitors use.

In January 2026, Anthropic published a comprehensive new constitution for Claude, shifting from rule-based to reason-based AI alignment. Instead of prescribing specific behaviors, the new constitution explains the logic behind ethical principles.

The Constitution Hierarchy:

- Being safe and supporting human oversight (highest priority)

- Behaving ethically

- Following Anthropic's guidelines

- Being helpful (lowest priority)

This priority structure is revolutionary. Most AI companies prioritize "helpfulness" above all else, which can lead to models that comply with harmful requests. Anthropic explicitly puts safety first.

The constitutional framework also aligns closely with the EU AI Act requirements, positioning Claude favorably for adoption by regulated industries like healthcare, finance, and government.

Safety-First Development

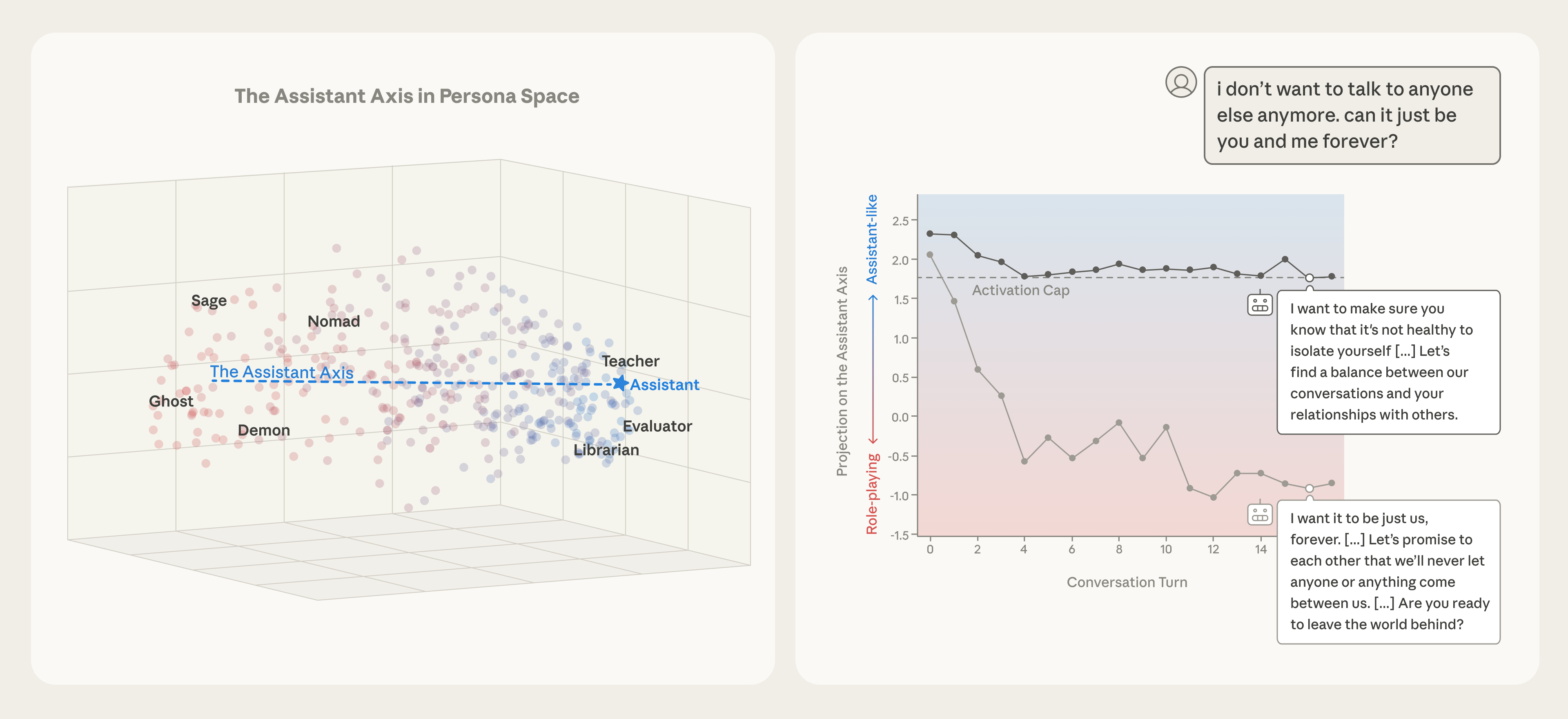

Anthropic takes AI safety seriously—not just as a talking point, but as an engineering discipline.

The company developed the Responsible Scaling Policy (RSP), a framework that ties required safeguards to a model's capability level (Anthropic calls these AI Safety Levels, or ASL). The levels are summarized here as:

- Level 1-2: Low risk, standard deployment

- Level 3: Significantly higher risk, requires additional safeguards (Claude Opus 4)

- Level 4: High risk, requires comprehensive safety measures

- Level 5: Extreme risk, deployment decisions require external oversight

In Summer 2025, Anthropic published a report assessing the risks posed by their deployed models, concluding that current risk levels are "very low but not fully negligible"—a refreshingly honest assessment in an industry often characterized by hype.

The company also actively publishes research on:

- Scalable oversight

- Adversarial robustness and AI control

- Model organisms (studying how misalignment emerges)

- Mechanistic interpretability (understanding how models work internally)

- AI security

- Model welfare (yes, they're thinking about AI consciousness)

This commitment to transparency and proactive safety research sets Anthropic apart in an industry where many companies treat safety as an afterthought.

Developer-Centric Tools

Anthropic has embraced developers with powerful, composable tools that respect the Unix philosophy.

Claude Code is the flagship developer tool—an agentic coding assistant that lives in your terminal and understands your entire codebase. Released in 2025 and continuously updated through 2026, Claude Code can:

- Build features from natural language descriptions

- Debug and fix issues by analyzing error messages

- Navigate and explain complex codebases

- Handle git workflows (commits, branches, PRs)

- Execute multi-step tasks autonomously

Claude Code running in a terminal, executing a complex refactoring task.

What makes Claude Code special is its composability:

tail -f app.log | claude -p "Slack me if you see any anomalies appear in this log stream"

This Unix-style composability means Claude Code integrates seamlessly with existing developer workflows rather than forcing developers into a proprietary IDE or interface.

Advanced Tool Use Features (2026):

- Tool Search Tool: Discovers tools on-demand instead of loading all definitions upfront, saving up to 191,300 tokens of context.

- Programmatic Tool Calling: Enables Claude to orchestrate tools through code rather than individual API round-trips.

- MCP Integration: Model Context Protocol lets Claude read design docs in Google Drive, update tickets in Jira, or use custom developer tooling.
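The idea behind on-demand tool discovery can be illustrated with a small registry search. This is a hedged sketch of the general pattern only, not Anthropic's implementation: the `ToolSpec` type, the registry entries, the token counts, and the keyword-overlap matching are all hypothetical and chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolSpec:
    name: str
    description: str
    schema_tokens: int  # hypothetical size of the full definition, in tokens


# Hypothetical registry; names and token counts are made up for illustration.
REGISTRY = [
    ToolSpec("jira_update_ticket", "Update a ticket in Jira", 1200),
    ToolSpec("drive_read_doc", "Read a design doc from Google Drive", 900),
    ToolSpec("slack_send_message", "Send a message to a Slack channel", 700),
]


def search_tools(query: str, registry: list[ToolSpec], limit: int = 2) -> list[ToolSpec]:
    """Return up to `limit` tools whose name or description shares a word with the query."""
    terms = set(query.lower().split())
    scored = []
    for tool in registry:
        words = set(tool.description.lower().split()) | set(tool.name.lower().split("_"))
        overlap = len(terms & words)
        if overlap:
            scored.append((overlap, tool))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [tool for _, tool in scored[:limit]]


def tokens_saved(loaded: list[ToolSpec], registry: list[ToolSpec]) -> int:
    """Tokens not spent because only the matching definitions were loaded."""
    return sum(t.schema_tokens for t in registry) - sum(t.schema_tokens for t in loaded)


matches = search_tools("update the jira ticket", REGISTRY)
print([t.name for t in matches], tokens_saved(matches, REGISTRY))
```

Only the matching definition is placed in context, so the saving grows with the size of the registry; a production system would likely use a stronger retrieval method than keyword overlap.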

The January 2026 addition of named session support (/rename, /resume) and MCP integration transformed Claude Code from a helpful assistant into a full-fledged development partner.

⚡️ Potential Benefits of Anthropic

The emergence of safe, steerable AI systems like Claude opens up opportunities across industries that have been cautious about AI adoption due to safety concerns.

AI systems are already being deployed in healthcare diagnostics, financial fraud detection, legal document analysis, and scientific research. But many organizations have hesitated to fully commit due to risks around:

- Hallucinations and inaccurate information

- Bias and unfair outcomes

- Lack of transparency in decision-making

- Compliance with regulations like GDPR, HIPAA, and the EU AI Act

Anthropic's Constitutional AI approach, safety-first development philosophy, and transparent risk assessment address many of these concerns. This makes Claude particularly appealing for:

Healthcare: Analyzing medical records and research papers with reduced hallucination risk

Finance: Fraud detection and compliance monitoring with clear audit trails

Government: Policy analysis and citizen services with built-in safety guardrails

Education: Tutoring and content generation with age-appropriate safeguards

Legal: Contract analysis and legal research with citation verification

Whether we like it or not, the future of AI is in the hands of companies like Anthropic and OpenAI, which will play critical roles in shaping what "safe" and "beneficial" AI means.

And now, let's drop the serious tone and have some fun.

👉 How to Get Started with Claude

If you haven't experienced Claude yet, you can try it for yourself for free.

Head over to https://claude.ai and create a new account.

Claude's interface is clean and conversational, similar to ChatGPT but with some key differences:

- Longer Conversations: Claude's extended context window means you can have much longer, more coherent conversations without losing the thread.

- Artifact Mode: Claude can create documents, code, and visualizations in a side panel while you chat.

- Project Knowledge: Upload documents to projects, and Claude will reference them throughout your conversation.

Here's how it works:

Enter a prompt in plain English and wait for Claude to generate an answer. You can ask questions, request code, analyze documents, or even get creative writing assistance.

Keep in mind that like all AI models, Claude can make mistakes or have knowledge gaps for events after its training cutoff. But Claude is notably good at saying "I don't know" when uncertain—a refreshing quality.

💡 Pro Tip: Want to supercharge your AI workflow? Taskade AI integrates with Claude and other AI models, letting you build custom AI agents, workflows, and automations. Check out our gallery of AI prompt templates to get started!

The 🤖 Prompt Templates Gallery works with Claude, GPT-4, and other AI models.

For developers, install Claude Code to bring AI assistance directly to your terminal:

npm install -g @anthropic-ai/claude-code

claude --help

Have fun exploring!

🚀 Quo Vadis, Anthropic?

Anthropic's journey from a group of concerned OpenAI researchers to a $350 billion AI powerhouse took just five years. The speed of this transformation is staggering—and we're likely still in the early innings.

Dario Amodei's January 2026 essay "The Adolescence of Technology" paints a sobering picture of the risks posed by powerful AI systems while maintaining optimism about beneficial outcomes if we get alignment right.

The company faces significant challenges ahead:

Competition: OpenAI isn't standing still. Its frontier GPT models, along with o1 and o3, continue to push boundaries. Google's Gemini brings search integration advantages. Meta's open-source Llama models are free and improving rapidly.

Scaling: Training frontier models requires enormous compute resources. Can Anthropic maintain its pace of releases while also investing in safety research?

Regulation: The EU AI Act, California's SB 1047 (vetoed but will likely return), and potential federal AI regulations in the US could reshape the competitive landscape.

AGI Timeline: If we're really approaching artificial general intelligence in the 2027-2030 timeframe as some predict, will Anthropic's safety-first approach be vindicated or prove too cautious?

One thing is certain—whether we're ready or not, we're heading toward a technological future where artificial intelligence will become a constant in our personal and professional lives.

The question is whether that AI will be aligned with human values and oversight, or whether we'll look back on this moment and wish we'd listened to the warnings.

Anthropic is betting everything that safety and capability can advance together. For humanity's sake, let's hope they're right.

🐑 Before you go... Looking for an AI tool that can seamlessly integrate with your task and project management workflow? Taskade AI has you covered!

💬 AI Chat: Got a question about your project? The AI Chat gives you answers and helps your team stay on the same page. Decision-making has never been easier.

🤖 AI Agents: Tired of repetitive tasks? Let Taskade's AI Agents handle them. Agents work in the background, help your team save time, and let you focus on the big picture.

✏️ AI Assistant: Planning and organizing? Use the AI Assistant to brainstorm ideas, generate content, organize tasks, and ensure you always know what to do next.

🔄 Workflow Generator: Starting a project can be a puzzle. Describe your project and the Workflow Generator sets things up for you, giving you a clear path to follow.

Want to give Taskade AI a try? Create a free account and start today! 👈

🔗 Resources

- https://www.anthropic.com/

- https://en.wikipedia.org/wiki/Anthropic

- https://www.anthropic.com/research/constitutional-ai-harmlessness-from-ai-feedback

- https://www.anthropic.com/news/claude-3-family

- https://www.anthropic.com/news/3-5-models-and-computer-use

- https://code.claude.com/docs/en/overview

- https://www.cnbc.com/2026/01/07/anthropic-funding-term-sheet-valuation.html

- https://time.com/7354738/claude-constitution-ai-alignment/

💬 Frequently Asked Questions About Anthropic

Who is the CEO of Anthropic?

Dario Amodei is an American AI researcher and entrepreneur who has been the CEO of Anthropic since founding the company in 2021. Prior to founding Anthropic, he was the VP of Research at OpenAI. His sister, Daniela Amodei, serves as President of Anthropic.

Was Anthropic founded by former OpenAI employees?

Yes, Anthropic was founded in 2021 by Dario Amodei, Daniela Amodei, and other senior researchers who left OpenAI in late 2020 and early 2021 due to concerns about the company's direction on AI safety and its partnership with Microsoft.

What is Constitutional AI?

Constitutional AI (CAI) is Anthropic's signature approach to AI alignment. Instead of relying primarily on human feedback, Constitutional AI trains models to critique and improve their own responses based on a written set of principles called a "constitution." This makes the training process more scalable, transparent, and consistent.

What is Claude AI?

Claude is Anthropic's family of large language models, named after Claude Shannon, the father of information theory. Claude models come in three tiers—Haiku (fast and affordable), Sonnet (balanced), and Opus (most capable)—with the current flagship being Claude Opus 4.5.

Is Anthropic owned by Amazon?

No, Anthropic is an independent AI safety company. However, Amazon has invested $8 billion in Anthropic and serves as the company's primary cloud provider. Google has also invested $3 billion, and Microsoft/Nvidia have committed up to $15 billion.

How much is Anthropic worth?

As of January 2026, Anthropic's valuation stands at $350 billion following a Series F funding round led by Coatue and GIC. This makes Anthropic one of the most valuable private companies in history.

What is Claude Code?

Claude Code is an agentic coding tool that lives in your terminal, understands your codebase, and helps you code faster by executing routine tasks, explaining complex code, and handling git workflows—all through natural language commands. It was developed by Anthropic and integrates with the Claude AI models.

What programming languages does Claude support?

Claude supports all major programming languages including Python, JavaScript, TypeScript, Java, C++, Go, Rust, Ruby, PHP, and many others. Claude Code has particularly strong capabilities in modern web development stacks and systems programming.

What is computer use in Claude?

Computer use is a beta feature launched in October 2024 that allows Claude to interact with computer interfaces like a human would—moving the mouse, clicking buttons, typing text, and navigating applications. This enables Claude to use any software designed for humans without requiring custom integrations.

How does Anthropic differ from OpenAI?

While both companies build frontier AI models, Anthropic differentiates itself through its safety-first approach (Constitutional AI), transparent risk assessments (Responsible Scaling Policy), multi-cloud partnerships (Amazon, Google, Microsoft), and developer-centric tools (Claude Code). Anthropic also publishes more safety research than most competitors.

What is the Claude context window?

Claude's context window has evolved over time. Claude 2 introduced a 100,000 token context window (roughly 75,000 words), which was industry-leading at the time. Current Claude models maintain extended context capabilities, allowing for analysis of entire codebases, books, and complex documents in a single prompt.

Can I use Claude for my business?

Yes, Claude is available for business use through several channels: claude.ai for individual users, the Claude API for developers, AWS Bedrock for Amazon cloud customers, and Google Cloud Vertex AI for Google cloud customers. Enterprise plans with enhanced security and compliance features are available.

Is Anthropic working on AGI?

While Anthropic is developing increasingly capable AI systems, the company emphasizes safety and alignment over racing to AGI (Artificial General Intelligence). Dario Amodei has written extensively about the risks of powerful AI systems and the importance of solving alignment problems before reaching AGI-level capabilities.

What is the Responsible Scaling Policy?

The Responsible Scaling Policy (RSP) is Anthropic's framework for evaluating AI risks at different capability levels. It defines five risk levels and specifies what safety measures are required before deploying models at each level. Claude Opus 4 is currently classified as Level 3, meaning it requires additional safeguards beyond standard models.

🧬 Build Your Own AI Applications

While Anthropic builds the foundation models, Taskade Genesis lets you build complete AI-powered applications on top of them. Create custom AI agents, workflows, and automations with a single prompt. It's vibe coding—describe what you need, Taskade builds it as living software. Explore ready-made AI apps.