What is Google Gemini? Complete History: DeepMind, Bard, Multimodal AI & The Race to AGI (2026)

The complete history of Google Gemini from DeepMind acquisition to Bard launch, the Brain-DeepMind merger, and Gemini 3's breakthrough. Updated January 2026.


Google Gemini is Google's family of multimodal AI models designed to compete with OpenAI's GPT and Anthropic's Claude. What started as two separate AI research groups—Google Brain and DeepMind—merged in 2023 to create Google DeepMind, launching Gemini and transforming Google's Bard chatbot into a true ChatGPT competitor.

But where did it all start? What makes Gemini different from ChatGPT? How did Google catch up after lagging behind? In today's article, we explore the complete history of Google's AI journey and the multimodal future. 🧠

🤖 What Is Google Gemini?

Google Gemini is a family of multimodal large language models developed by Google DeepMind, first launched in December 2023. The name "Gemini" refers to both the AI models themselves and the consumer chatbot interface that replaced Google Bard in February 2024.

"Gemini was built from the ground up to be multimodal, which means it can generalize and seamlessly understand, operate across and combine different types of information including text, code, audio, image and video."

Sundar Pichai, CEO of Google and Alphabet

Gemini comes in multiple tiers optimized for different use cases:

- Gemini 3 Ultra: Most capable model for complex reasoning (subscription)

- Gemini 3 Pro: Balanced performance for general tasks

- Gemini 3 Flash: Fast, cost-efficient for high-volume applications

- Gemini Nano: On-device AI for Android phones and Chrome

By January 2026, Google Gemini had achieved:

- 18% market share (up from near-zero in 2023)

- Gemini 3 broke 1500 Elo on LMArena (first AI to do so)

- 1 million token context window (approximately 750,000 words)

- 800+ million weekly users across Google products

- Powers Google Search, Workspace, Android, Chrome

So let's rewind and see how Google went from AI laggard to serious contender.

🥚 The History of Google Gemini

The Early Days: Google Brain (2011-2017)

Google's AI journey began long before Gemini.

In 2011, Google Fellow Jeff Dean, Google researcher Greg Corrado, and Stanford professor Andrew Ng started the Google Brain project as a part-time research collaboration. Their goal: explore deep learning for Google's products.

The "Cat Paper" (2012):

Google Brain's breakthrough came with unsupervised learning research. The team trained a neural network on 10 million YouTube thumbnails without labels. The network learned to recognize cats—purely from pattern recognition.

This seems mundane now, but in 2012, it was revolutionary. AI could learn concepts without labeled examples.

Key Google Brain Achievements (2011-2017):

- TensorFlow (2015): Open-source machine learning framework (now one of the most widely used)

- Transformer Architecture (2017): "Attention Is All You Need" paper—foundation of modern LLMs

- Google Translate: Neural machine translation (2016)

- Google Photos: Image recognition and search

- Smart Reply: AI-generated email responses

Google Brain became the R&D powerhouse for Google's AI integration across products.

But across the Atlantic, another AI lab was achieving even more remarkable breakthroughs.

DeepMind: From Chess to AlphaGo (2010-2016)

In 2010, Demis Hassabis, Shane Legg, and Mustafa Suleyman founded DeepMind in London with a mission to "solve intelligence, and then use that to solve everything else."

Hassabis was a fascinating founder:

- Child chess prodigy (second-best under-14 player in the world)

- Game designer (co-designed Theme Park; worked on the AI for Black & White)

- Neuroscientist (PhD from University College London)

Demis Hassabis, co-founder and CEO of DeepMind (now Google DeepMind), speaking about AI safety and AGI.

Early DeepMind Breakthroughs:

DQN (Deep Q-Network) - 2013:

DeepMind created an AI that learned to play Atari games using only pixel inputs and game scores. In the 2015 Nature follow-up, a single agent learned 49 games and reached human-level performance on many of them. No hand-crafted rules. Pure reinforcement learning.
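The idea behind DQN is Q-learning: the agent keeps an estimate of how much future reward each action is worth in each state and nudges that estimate toward what it actually observes. DQN replaces the lookup table with a deep network that reads raw pixels. The minimal sketch below keeps the table and uses a made-up five-cell corridor (a hypothetical toy, not an Atari game) so the update rule itself is visible. It is not DeepMind's implementation: there is no neural network, replay buffer, or target network.

```python
import random

N_STATES = 5        # cells 0..4 of a hypothetical toy corridor; cell 4 is the goal
ACTIONS = (-1, +1)  # index 0 = move left, index 1 = move right


def step(state, action):
    """Toy environment: reward 1.0 for reaching the goal cell, 0.0 otherwise."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    # q[s][a]: estimated discounted return of taking action a in state s
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 100:
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))  # explore
            else:
                best = max(q[state])             # exploit, breaking ties randomly
                a = rng.choice([i for i, v in enumerate(q[state]) if v == best])
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: move the estimate toward reward + discounted best next value
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state, steps = next_state, steps + 1
    return q


q = q_learning()
# Greedy policy for every non-goal cell
policy = ["left" if left > right else "right" for left, right in q[:-1]]
print(policy)
```

After training, the greedy policy should point right in every cell, because the learned values discount the distant reward less the closer the agent gets to the goal.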

Google Acquisition - 2014:

Google acquired DeepMind for a reported $500 million. The condition: DeepMind would remain an independent unit in the UK, reporting directly to Google's CEO.

AlphaGo - 2016:

Then came the moment that shocked the world.

In March 2016, DeepMind's AlphaGo defeated Lee Sedol, one of the world's best Go players, 4-1. Go was considered unsolvable by brute-force AI due to its complexity (10^170 possible board positions—more than atoms in the universe).

AlphaGo defeating Lee Sedol in 2016—a watershed moment demonstrating AI's potential beyond narrow tasks.

AlphaGo didn't just win—it played moves that human grandmasters called "beautiful" and "creative." AI had transcended calculation into intuition.
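Where does a number like 10^170 come from? A 19×19 board has 361 points, and each can be empty, black, or white, so 3^361 is an upper bound on board configurations. Only a small fraction of those are legal positions, which is how estimates land near 10^170. The quick check below computes the bound; it is plain arithmetic, not a count of legal positions.

```python
import math

POINTS = 19 * 19  # intersections on a standard Go board

# Each point is empty, black, or white, so 3**361 bounds the number of
# board configurations from above (legal or not).
upper_bound = 3 ** POINTS

print(len(str(upper_bound)))              # number of decimal digits in the bound
print(round(POINTS * math.log10(3), 2))   # the bound expressed as a power of 10
```

Even this loose bound dwarfs the roughly 10^80 atoms usually estimated for the observable universe, which is why exhaustive search was never an option.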

AlphaFold (2020-2022):

DeepMind's next moonshot: protein folding.

For 50 years, predicting how proteins fold from their amino acid sequences was biology's grand challenge. AlphaFold solved it.

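In 2020, AlphaFold 2 achieved a median accuracy score (GDT) of about 92 out of 100 at the CASP14 protein-structure competition, comparable to experimental methods for many targets. By 2022, it had predicted structures for more than 200 million proteins, nearly every protein known to science.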

This wasn't a game. It was science acceleration. Drug discovery, disease research, biotechnology—all transformed overnight.

The Parallel Tracks Problem (2017-2023)

By 2017, Google had two world-class AI research groups:

Google Brain (California):

- Focused on product integration

- Built TensorFlow, Google Translate, TPUs (Tensor Processing Units)

- Created LaMDA (Language Model for Dialogue Applications)

- Developed PaLM (Pathways Language Model)

DeepMind (London):

- Focused on fundamental AI research

- Built Gopher (language model)

- Created Sparrow (dialogue model with safety constraints)

- Achieved AlphaGo, AlphaFold, AlphaStar (StarCraft II)

The problem: redundant effort. Both teams built large language models independently. In a resource-constrained AI race, this was inefficient.

Then OpenAI launched ChatGPT in November 2022—and everything changed.

The ChatGPT Wake-Up Call (2022-2023)

ChatGPT's viral success caught Google off-guard.

Within 5 days, ChatGPT had 1 million users. Within 2 months, it reached an estimated 100 million, making it the fastest-growing consumer app in history at the time.

Google scrambled. In February 2023, they announced Bard—Google's answer to ChatGPT.

The Bard Launch Disaster:

During a live demo, Bard answered a question about the James Webb Space Telescope incorrectly, saying it took the first pictures of a planet outside our solar system. (It didn't—ground-based telescopes did that first.)

The mistake cost Alphabet $100 billion in market cap in a single day.

Bard launched in March 2023, but initial reviews were harsh:

- Slower than ChatGPT

- Less accurate

- More restricted (safety guardrails too aggressive)

- Awkward user experience

Google was losing the AI race—badly.

The Merger Decision:

In April 2023, Google made a bold move: merging Google Brain and DeepMind into a single unit, Google DeepMind.

Leadership:

- Demis Hassabis: CEO of Google DeepMind

- Jeff Dean: Chief Scientist reporting to Sundar Pichai

The rationale: combine DeepMind's fundamental research with Google Brain's product expertise to create the most capable AI systems.

Gemini Launch and Evolution (2023-2024)

In December 2023, Google launched Gemini 1.0, which Google described as built from the ground up to be multimodal.

What Makes Gemini Multimodal:

Unlike GPT-4 (text trained separately from vision), Gemini was trained simultaneously on text, images, audio, video, and code. This allows Gemini to:

- Understand a video, listen to audio, and read comments together

- Reason across modalities (e.g., "What's unusual about this image?" while understanding context)

- Generate responses that reference visual, audio, and textual information seamlessly
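At the API level, "multimodal" means a single request can mix content types. The sketch below builds a request body in which one user turn carries both a text question and an inline, base64-encoded image. The field names (`contents`, `parts`, `text`, `inline_data`, `mime_type`) reflect our understanding of the Gemini REST API's JSON shape and should be checked against the current API docs; the image bytes are a stand-in, not a real picture.

```python
import base64
import json


def build_multimodal_request(prompt: str, image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """Build a generateContent-style body mixing text and an inline image.

    Assumed JSON shape: contents -> parts -> {text} / {inline_data}.
    """
    return {
        "contents": [
            {
                "role": "user",
                "parts": [
                    {"text": prompt},
                    {
                        "inline_data": {
                            "mime_type": mime_type,
                            # Binary data travels as base64 text inside JSON
                            "data": base64.b64encode(image_bytes).decode("ascii"),
                        }
                    },
                ],
            }
        ]
    }


# Placeholder bytes standing in for a real PNG file
body = build_multimodal_request("What's unusual about this image?", b"\x89PNG fake bytes")
print(json.dumps(body, indent=2)[:200])
```

Because the text and the image sit in the same `parts` list of one turn, the model receives them as a single prompt rather than as separate vision and text calls.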

Gemini 1.0 Family (December 2023):

| Model | Use Case | Capability |
|---|---|---|
| Gemini Ultra | Complex reasoning | Matches GPT-4 on benchmarks |
| Gemini Pro | General tasks | Replaces PaLM 2 in Bard |
| Gemini Nano | On-device AI | Runs on Pixel 8 Pro phones |

Gemini 1.5 (February 2024):

Just two months later, Google released Gemini 1.5 Pro with a game-changing feature: a 1 million token context window.

To put that in perspective:

- GPT-4 (2023 launch): 32,000 tokens (~24,000 words)

- Claude 2 (2023 launch): 100,000 tokens (~75,000 words)

- Gemini 1.5 Pro: 1,000,000 tokens (~750,000 words)
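The word counts in these comparisons come from a rule of thumb of roughly 0.75 English words per token. Real ratios depend on the tokenizer and the text (code and non-English text usually need more tokens per word), so treat the helpers below as estimates only.

```python
WORDS_PER_TOKEN = 0.75  # rough rule of thumb for English prose; an assumption, not a tokenizer


def tokens_to_words(tokens: int) -> int:
    """Estimate how many English words fit in a given number of tokens."""
    return round(tokens * WORDS_PER_TOKEN)


def words_to_tokens(words: int) -> int:
    """Estimate how many tokens a text of the given word count needs."""
    return round(words / WORDS_PER_TOKEN)


windows = [
    ("GPT-4 (2023 launch)", 32_000),
    ("Claude 2 (2023 launch)", 100_000),
    ("Gemini 1.5 Pro", 1_000_000),
]
for name, window in windows:
    print(f"{name}: {window:,} tokens is roughly {tokens_to_words(window):,} words")
```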

This meant Gemini could process:

- Entire codebases

- Full-length books

- Hours of video transcripts

- Complete research papers with citations

And it used a Mixture of Experts (MoE) architecture for efficiency: for each token, only a few relevant expert sub-networks are activated, making it faster and cheaper to run than a dense model of comparable size.
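In a Mixture of Experts layer, a small router scores every expert for each token, only the top-k experts actually run, and their outputs are blended using the router's renormalized weights. Google has not published Gemini's exact routing scheme, so the sketch below shows generic top-k gating with hypothetical toy experts and fixed router scores; in a real model both the experts and the router are learned layers.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, experts, gate_logits, k=2):
    """Route one token vector x through its top-k experts and blend their outputs."""
    probs = softmax(gate_logits)
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)  # renormalize over the selected experts only
    out = [0.0] * len(x)
    for i in chosen:  # the other experts are never evaluated
        weight = probs[i] / norm
        out = [o + weight * y for o, y in zip(out, experts[i](x))]
    return out, chosen


# Hypothetical toy experts; real experts are learned feed-forward blocks.
experts = [
    lambda v: [2 * a for a in v],
    lambda v: [a + 1 for a in v],
    lambda v: [-a for a in v],
    lambda v: [0.0 for _ in v],
]
out, chosen = moe_forward([1.0, 2.0], experts, gate_logits=[2.0, 1.0, 0.1, -1.0], k=2)
print(chosen, out)
```

The saving comes from the loop: with four experts and `k=2`, half of the expert computation is skipped for this token, while the total parameter count still includes all four.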

Bard Becomes Gemini (February 2024)

In February 2024, Google made another strategic move: rebrand Bard to Gemini.

Why the Rebrand?

Sundar Pichai explained: "Bard was the most direct way people could interact with our models. It made sense to evolve it to Gemini because users were actually talking directly to the underlying Gemini model."

Translation: Google wanted a unified brand. "Gemini" now meant:

- The AI models (Gemini 1.0, 1.5, etc.)

- The chatbot interface (formerly Bard)

- Google Workspace AI features (formerly "Duet AI")

Gemini Mobile Apps:

- Android: Standalone Gemini app

- iOS: Integrated into Google app

Subscription Tiers:

- Free: Gemini Pro access

- Gemini Advanced ($19.99/mo): Gemini Ultra access, 2TB Google One storage, integration with Gmail/Docs

Gemini 2.0, 3.0 and the Race Heats Up (2024-2026)

The pace accelerated dramatically.

Gemini 2.0 (December 2024):

Expanded the Flash line of models optimized for speed and cost:

- Gemini 2.0 Flash: twice as fast as 1.5 Pro, better performance on key benchmarks

- Gemini 2.0 Flash-Lite (early 2025): Ultra-low latency for high-volume apps

Gemini 3.0 (November 2025):

The breakthrough came with Gemini 3 Pro:

Benchmark Achievements:

- First AI to break 1500 Elo on LMArena

- 91.9% on GPQA Diamond (PhD-level science reasoning)

- 37.5% on Humanity's Last Exam (without tools)

- 76.2% on SWE-bench Verified (coding benchmark)
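LMArena ranks models from head-to-head human votes and reports the result on an Elo-style scale, so the absolute number matters less than the gaps between models. Assuming the standard Elo convention (a 400-point gap corresponds to 10:1 odds), the expected head-to-head preference rate works out as in the sketch below; LMArena's own statistical fitting differs in detail, so read this as intuition, not its methodology.

```python
def expected_win_rate(rating_a: float, rating_b: float) -> float:
    """Standard Elo expected score of A against B (base 10, scale 400)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


# How often a 1500-rated model would be preferred over slightly lower-rated rivals
for gap in (10, 50, 100):
    print(gap, round(expected_win_rate(1500, 1500 - gap), 3))
```

A 100-point lead translates into being preferred in only about 64% of matchups, which is why leaderboard positions can shuffle quickly.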

Shockingly, Gemini 3 Flash outperformed Pro on coding (78% vs 76.2%) while being 3x faster and significantly cheaper.

Gemini 3 Timeline:

| Date | Release | Key Features |
|---|---|---|
| Nov 2025 | Gemini 3 Pro | State-of-the-art reasoning, multimodal |
| Dec 2025 | Gemini 3 Flash | Beats Pro on coding, 3x faster |
| Dec 2025 | Computer Use Tool | Control computers like humans |
| Jan 2026 | Gemini 3 Deep Think | Extended reasoning for complex problems |

Market Impact:

By early 2026:

- Gemini captured 18% market share (up from <5% in 2023)

- ChatGPT dropped to 68% (from 87%)

- The ChatGPT-Gemini duopoly controls 86% of the LLM market

Competitive Landscape (2026):

| Model | Company | Strength | Weakness |
|---|---|---|---|
| Gemini 3 | Google | Multimodal, integration, speed | Less creative writing |
| GPT-5.2 | OpenAI | Reasoning, creativity | Expensive, slower |
| Claude Opus 4.5 | Anthropic | Safety, coding | Smaller ecosystem |
| Llama 3.3 | Meta | Open source, free | Less capable |

🔎 The Google Ecosystem Advantage

Unlike OpenAI and Anthropic, Google has a massive distribution advantage.

Gemini Integration Across Google:

- Google Search: AI Overviews powered by Gemini

- Gmail: Smart Compose, email summarization

- Google Docs: "Help me write" with Gemini

- Google Sheets: Formula generation, data analysis

- Google Meet: Real-time transcription, meeting summaries

- Android: Gemini Nano on-device AI

- Chrome: Built-in AI assistance

- Google Photos: Advanced search, editing suggestions

- YouTube: Content recommendations, auto-captioning

The Network Effect:

Every Gmail user, Docs user, and Android phone owner becomes a potential Gemini user—whether they realize it or not.

This is Google's moat: ambient AI. You don't go to Gemini—Gemini comes to you, embedded in tools you already use.

🤯 The Leadership Dynamic: Hassabis and Pichai

An interesting dynamic has emerged: Demis Hassabis says he talks to Sundar Pichai every day.

Hassabis calls DeepMind "the engine room" of Google's AI efforts. In his framing, core AI technology is developed at DeepMind and then diffuses across Google products.

The Succession Question:

Industry observers speculate that Hassabis is being positioned as Pichai's potential successor. His role at Google DeepMind places him in conversations about long-term succession planning.

Hassabis's unique background—chess prodigy, game designer, neuroscientist, AI researcher—makes him a compelling candidate for leading Google in an AI-first era.

But Pichai remains firmly in control, and no official statements about succession have been made.

🤔 So, What Makes Gemini Different?

Multimodal from the Ground Up

The key differentiator: Gemini was designed to be multimodal, not retrofitted.

GPT-4 Approach:

- Train text model (GPT-4)

- Add vision capabilities later (GPT-4V)

- Separate training pipelines

- Combined post-training

Gemini Approach:

- Train on text, images, video, audio simultaneously

- Shared understanding across modalities

- Native multimodal reasoning

- No separate vision "add-on"

This architectural difference enables Gemini to:

- Answer questions about videos naturally

- Understand context across images and text

- Generate responses referencing visual elements

- Reason about code, diagrams, and documentation together

Google Gemini multimodal demo — real-time understanding across text, images, video, and code.

The Context Window Advantage

Gemini's 1 million token context window enables use cases that smaller context windows cannot handle in a single prompt:

Real-World Applications:

- Legal Document Analysis: Process entire contracts with exhibits

- Codebase Understanding: Analyze repositories with thousands of files

- Research Synthesis: Digest multiple papers with full citations

- Video Analysis: Process hours of content for insights

- Customer Support: Maintain conversation history for complex issues

Some Claude models offer comparable context lengths, but Gemini's integration with Google Workspace makes it more accessible to enterprise users.
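In practice, "fits in the context window" is a budgeting question. The sketch below estimates tokens with a rough four-characters-per-token heuristic (an assumption; real tokenizers vary, especially for code) and greedily selects the smallest files of a repository until a 1,000,000-token budget, minus some headroom for the question itself, is used up. `pack_files` and its parameters are hypothetical helpers for illustration, not part of any Google API.

```python
CONTEXT_WINDOW = 1_000_000  # tokens, the figure quoted in this article
CHARS_PER_TOKEN = 4         # rough heuristic; an assumption, not a real tokenizer


def estimate_tokens(text: str) -> int:
    """Estimate token count with ceiling division on the character count."""
    return -(-len(text) // CHARS_PER_TOKEN)


def pack_files(files: dict[str, str], budget: int = CONTEXT_WINDOW, reserve: int = 8_000):
    """Greedily pick files (smallest first) until the estimate would exceed the budget.

    `reserve` keeps headroom for the instructions you send alongside the files.
    Returns (selected_paths, skipped_paths, estimated_tokens_used).
    """
    limit = budget - reserve
    used, selected, skipped = 0, [], []
    for path, text in sorted(files.items(), key=lambda kv: len(kv[1])):
        cost = estimate_tokens(text)
        if used + cost <= limit:
            selected.append(path)
            used += cost
        else:
            skipped.append(path)
    return selected, skipped, used


# Hypothetical repository contents
files = {"a.py": "x" * 400, "b.py": "y" * 4_000_000, "c.md": "z" * 40}
selected, skipped, used = pack_files(files)
print(selected, skipped, used)
```

Smallest-first maximizes how many files get in; if a few large files matter most, sort by priority instead.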

Speed and Cost Efficiency

Gemini 3 Flash represents a shift: a cheaper, faster model that beats the premium model on a key coding benchmark.

Gemini 3 Flash vs Pro:

- 3x faster response times

- 78% vs 76.2% on coding benchmarks (Flash wins!)

- 30% fewer tokens on average for tasks

- $0.50 vs $2.00 per million input tokens (75% cheaper)

At least for coding workloads, this inverts the traditional AI model economics: you may not have to choose between quality and cost.

⚡️ Potential Benefits of Google Gemini

Democratizing AI Through Integration

Google's strategy: make AI invisible by embedding it everywhere.

For Consumers:

- Gmail writes better emails automatically

- Google Photos organizes memories intelligently

- Android phones understand voice commands naturally

- Search answers questions directly

For Businesses:

- Google Workspace becomes AI-augmented

- Customer support automates with Gemini

- Data analysis happens in Sheets with natural language

- Content creation scales with AI assistance

For Developers:

- Google AI Studio provides free prototyping

- Gemini API offers competitive pricing

- Integration with Google Cloud simplifies deployment

- Multimodal capabilities unlock new applications

Accelerating Scientific Research

DeepMind's heritage shows in Gemini's scientific capabilities:

AlphaFold Integration:

- Gemini can reason about protein structures

- Suggests drug candidates for diseases

- Accelerates biotech research

Climate Modeling:

- Google's AI predicts weather patterns

- Optimizes renewable energy usage

- Models climate change scenarios

Materials Science:

- Discovers new materials for batteries

- Designs more efficient solar cells

- Accelerates physics simulations

Concerns and Criticisms

Not everyone trusts Google with AI dominance:

Privacy Concerns:

- Gemini integrated with Gmail/Docs sees sensitive data

- What does Google do with this information?

- Can you truly opt-out if it's built into products you rely on?

Monopoly Risks:

- Google already dominates search (90%+ market share)

- Adding AI to search further entrenches dominance

- Competitors (Bing, DuckDuckGo) struggle to keep up

Bias and Safety:

- Gemini's image generation faced criticism for historical inaccuracies

- Overcorrection for diversity led to factually wrong outputs

- Google had to pause Gemini's image generation feature temporarily

Google's response: continuous improvement on safety, transparency reports, and external audits. But trust remains a challenge.

👉 How to Get Started with Gemini

Ready to experience Google's AI? Here's how to start:

For Consumers:

- Go to https://gemini.google.com

- Sign in with your Google account

- Start chatting with Gemini Pro (free tier)

- Upgrade to Gemini Advanced ($19.99/mo) for Ultra access

For Developers:

- Visit https://ai.google.dev/aistudio

- Create a Google AI Studio account (free)

- Prototype prompts with Gemini models

- Generate API keys for your apps

- Deploy using the Gemini API
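Once you have an API key, a text-only call is a single HTTP POST. The sketch below uses only the Python standard library and targets the REST `generateContent` endpoint under `https://generativelanguage.googleapis.com/v1beta/`, sending the key in the `x-goog-api-key` header. The default model name is a placeholder (an assumption: pick a current ID from the Gemini API docs), and the environment variable names `GEMINI_API_KEY` and `GEMINI_MODEL` are our own choice. The official SDKs wrap the same request.

```python
import json
import os
import urllib.request

API_ROOT = "https://generativelanguage.googleapis.com/v1beta/models"


def build_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build a POST request for a single-turn, text-only generateContent call."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        f"{API_ROOT}/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def extract_text(response: dict) -> str:
    """Concatenate the text parts of the first candidate in the response."""
    parts = response["candidates"][0]["content"]["parts"]
    return "".join(p.get("text", "") for p in parts)


# Only hits the network when a key is configured.
if __name__ == "__main__" and "GEMINI_API_KEY" in os.environ:
    model = os.environ.get("GEMINI_MODEL", "gemini-2.0-flash")  # placeholder model ID
    req = build_request(model, "Explain the Transformer in one sentence.", os.environ["GEMINI_API_KEY"])
    with urllib.request.urlopen(req) as resp:
        print(extract_text(json.load(resp)))
```

Keeping request building and response parsing in separate functions makes both easy to test without a network connection or a real key.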

Pricing (2026):

| Model | Input (per 1M tokens) | Output (per 1M tokens) |
|---|---|---|
| Gemini 3 Flash | $0.50 | $3.00 |
| Gemini 3 Pro | $2.00-$4.00 | $12.00-$18.00 |
| Gemini 3 Ultra | Subscription only ($19.99/month) | Subscription only |
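To compare models for a real workload, multiply your expected monthly token volumes by the per-million prices in the pricing table. The sketch below uses the lower end of Gemini 3 Pro's range; the dictionary keys are illustrative labels, not official model IDs, and prices change, so check the current pricing page before budgeting.

```python
# USD per 1 million tokens, taken from the pricing table (Pro at its lower tier).
PRICES = {
    "gemini-3-flash": {"input": 0.50, "output": 3.00},  # illustrative label
    "gemini-3-pro": {"input": 2.00, "output": 12.00},   # illustrative label
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated cost in USD for the given token volumes."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000


# Hypothetical monthly workload: 10M input tokens, 2M output tokens
flash = estimate_cost("gemini-3-flash", 10_000_000, 2_000_000)
pro = estimate_cost("gemini-3-pro", 10_000_000, 2_000_000)
print(f"Flash: ${flash:.2f}  Pro: ${pro:.2f}")
```

For this workload the estimate is $11 for Flash versus $44 for Pro, the same 75% saving quoted for input tokens alone, because both input and output prices differ by the same factor of four.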

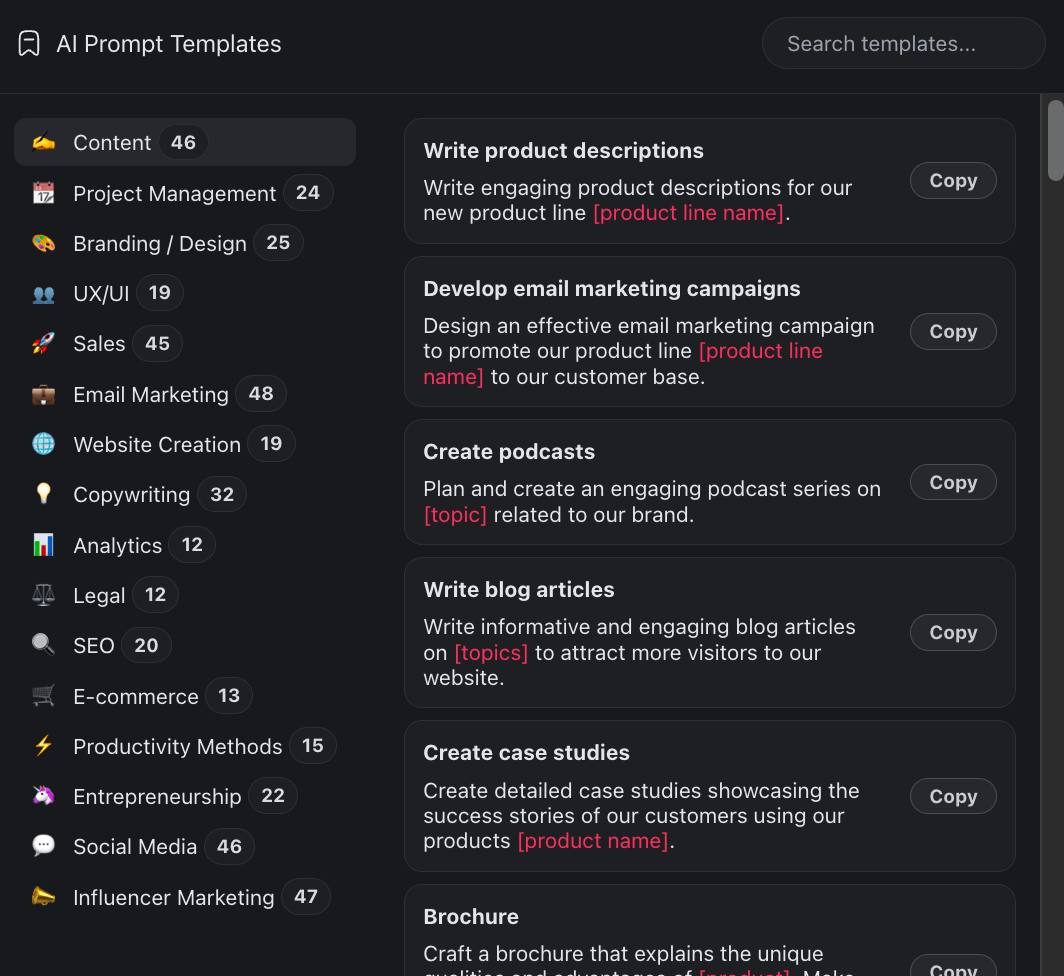

💡 Pro Tip: Building AI workflows? Taskade AI integrates with Gemini and other AI models to manage your projects, automate tasks, and coordinate teams. Use Gemini for intelligence, Taskade for execution!

Taskade prompt templates work with Gemini, ChatGPT, and Claude.

Tips for Success:

- Use Gemini Advanced for complex reasoning tasks

- Leverage the 1M token context for long documents

- Try multimodal prompts (upload images with text questions)

- Integrate with Google Workspace for productivity gains

- Use Flash for high-volume, cost-sensitive applications

🚀 Quo Vadis, Google Gemini?

From two separate AI labs founded in 2010 and 2011 to a unified Google DeepMind in 2023, and now to 18% market share in 2026, Google's AI journey has been turbulent but successful.

The Strategic Questions:

Can Google catch OpenAI?

Google has advantages:

- Distribution: 2 billion+ users across products

- Infrastructure: Google Cloud, TPUs, global data centers

- Talent: DeepMind researchers are world-class

- Data: Search, YouTube, Gmail provide training advantages

But OpenAI has momentum:

- ChatGPT mindshare: Still the default AI for most people

- Developer ecosystem: More integrations, more startups building on GPT

- Reasoning models: o1 and o3 push boundaries

Will Gemini become the default AI?

Google's ambient AI strategy—embedding Gemini everywhere—could make it ubiquitous even if people don't seek it out explicitly.

You may not say "I'm using Gemini," but:

- Your emails autocomplete with it

- Your photos organize with it

- Your searches answer with it

- Your phone understands you through it

That's arguably more powerful than conscious adoption.

What about AGI?

Demis Hassabis has been explicit: DeepMind's mission is to "solve intelligence, and then use that to solve everything else."

Whether that happens in 2026, 2030, or 2040—Google DeepMind is positioning itself to be there first.

🐑 Before you go... Looking for a way to coordinate your AI projects across Google Workspace? Taskade AI works seamlessly with Gemini!

💬 AI Chat: Manage projects, track tasks, and keep your team aligned while using Gemini for intelligence.

🤖 AI Agents: Automate repetitive workflows while Gemini handles the reasoning.

✏️ AI Assistant: Plan features, organize research, and document insights from Gemini.

🔄 Workflow Generator: Create structured processes that integrate Gemini's capabilities with your team's workflow.

Want to give Taskade AI a try? Create a free account and start today! 👈

🔗 Resources

- https://gemini.google.com/

- https://ai.google.dev/

- https://en.wikipedia.org/wiki/Google_Gemini

- https://en.wikipedia.org/wiki/Google_DeepMind

- https://deepmind.google/

- https://blog.google/technology/ai/google-gemini-ai/

- https://www.cnbc.com/2023/04/20/alphabet-merges-ai-focused-groups-deepmind-and-google-research.html

- https://ai.google.dev/gemini-api/docs/

💬 Frequently Asked Questions About Google Gemini

What is Google Gemini?

Google Gemini is a family of multimodal large language models developed by Google DeepMind, first launched in December 2023. Gemini can understand and generate text, images, audio, video, and code. The name also refers to Google's AI chatbot (formerly Bard) and AI features across Google Workspace.

Is Gemini better than ChatGPT?

Gemini 3 and ChatGPT excel in different areas. Gemini is better at multimodal tasks, has a larger context window (1M tokens vs 128K), and integrates deeply with Google products. ChatGPT is often preferred for creative writing and has better memory features. Gemini 3 was the first model to break 1500 Elo on LMArena, a sign of how highly users rate it in head-to-head comparisons.

What is the difference between Bard and Gemini?

Bard was Google's chatbot launched in March 2023, initially powered by LaMDA and later by PaLM 2. In February 2024, Google rebranded Bard to Gemini to align with the underlying Gemini models. Gemini is more capable, multimodal, and integrated across Google products.

Is Google Gemini free?

Yes, Gemini offers a free tier with access to Gemini Pro models through the Gemini chatbot interface (gemini.google.com). For advanced features, Gemini Advanced costs $19.99/month and includes Gemini Ultra access, 2TB of Google One storage, and integration with Gmail and Docs.

What is Google DeepMind?

Google DeepMind is the merged AI research lab created in April 2023 by combining Google Brain (founded 2011) and DeepMind (acquired 2014). Led by CEO Demis Hassabis, Google DeepMind develops Google's AI models including Gemini and is responsible for breakthroughs like AlphaGo and AlphaFold.

What is the Gemini context window?

Gemini 1.5 Pro and Gemini 3 models support a 1 million token context window, which is approximately 750,000 words. This is significantly larger than ChatGPT's 128,000 tokens and allows Gemini to process entire books, large codebases, and hours of video transcripts in a single prompt.

How much does Gemini API cost?

Gemini 3 Flash costs $0.50 per million input tokens and $3.00 per million output tokens. Gemini 3 Pro costs $2.00-$4.00 per million input tokens and $12.00-$18.00 per million output tokens depending on context size. These prices make Gemini competitive with or cheaper than GPT-4 and Claude.

What is multimodal AI?

Multimodal AI can understand and generate multiple types of data—text, images, audio, video, and code—in a single model. Gemini was built multimodal from the ground up, meaning it was trained simultaneously on all these data types, allowing it to reason across modalities naturally.

Who is Demis Hassabis?

Demis Hassabis is the CEO of Google DeepMind, co-founder of DeepMind (acquired by Google in 2014), and a former chess prodigy, game designer, and neuroscientist. He leads Google's AI research and development efforts and is considered a potential future CEO of Google/Alphabet.

Can Gemini generate images?

Yes, Gemini can generate images through the Imagen integration. However, Google temporarily paused Gemini's image generation feature in early 2024 after criticism about historical inaccuracies and overcorrection for diversity. The feature was later relaunched with improvements.

How does Gemini compare to Claude?

Gemini 3 offers a 1 million token context window, while Claude Opus 4.5 offers 200,000 tokens (some other Claude models support up to 1 million in beta); both perform strongly. Claude generally has fewer hallucinations and is preferred for coding and research. Gemini excels at multimodal tasks and has deeper integration with Google's ecosystem. Gemini 3 Flash is significantly faster and cheaper than Claude while matching or exceeding performance on many benchmarks.

What is Gemini Advanced?

Gemini Advanced is Google's $19.99/month subscription that provides access to Gemini Ultra (the most capable model), priority access to new features, 2TB of Google One storage, and integration with Gmail, Docs, Sheets, and other Workspace apps. It competes with ChatGPT Plus and Claude Pro.

Does Gemini work offline?

Gemini Nano, a smaller version of Gemini, can run on-device on supported Android phones (Pixel 8 and newer, Samsung Galaxy S24 and newer). This enables AI features like smart replies and voice transcription to work offline. Full Gemini Pro and Ultra require an internet connection.

What is Google AI Studio?

Google AI Studio is a browser-based development environment for prototyping with Gemini models. It's free to use, allows developers to test prompts, configure model parameters, and generate API code. AI Studio provides access to all Gemini models, including experimental previews before they are generally available.

🧬 Build Your Own AI Applications

While Google builds the multimodal AI models, Taskade Genesis lets you build AI-powered productivity applications on top of them. Create custom AI agents, project workflows, and team automations with a single prompt. It's vibe coding for productivity—describe what you need, Taskade builds it as living software. Explore ready-made AI apps.