Large language models (LLMs) like OpenAI’s GPT-4 Turbo and Llama 2 have changed the way we think, work, and collaborate. But even the most advanced AIs occasionally spiral down the rabbit hole of inaccuracies and imaginative detours (like that one friend who saw a UFO once). This is where Retrieval-Augmented Generation (RAG) comes into play.

So, what exactly is RAG? How does it enhance AI-generated responses? Why is it considered a breakthrough in dealing with the limitations of LLMs? Let’s find out.

But first, there is one more thing we need to talk about. 👇

🚧 Limitations of Current AI Language Models

When researchers at OpenAI released GPT-3 (Generative Pre-trained Transformer 3) in June 2020, it caused quite a stir. Not necessarily because it was a novel idea, but because it far surpassed its older, more obscure sibling, GPT-2, released just over a year earlier.

GPT-3 boasted 175 billion parameters compared to GPT-2’s 1.5 billion. That and more diverse training data gave it a much better grasp of natural language, which translated to an ability to generate eerily human-like and contextually relevant text.

GPT-3 could do it all — draft legal documents, pen poetry, write code, or… generate recipe ideas. It was difficult not to get swept up in the excitement.

But like any other LLM (including the far more capable GPT-4, which is rumored to have 1.76 trillion parameters), it had some flaws.

LLMs are only as good as their training data. This means that their capabilities are limited by the core “knowledge” acquired during the training process.

For instance, a model trained on data only up to 2019 wouldn’t know about the COVID-19 pandemic or the latest swings in climate change debates. It also wouldn’t know about the newest laws and regulations affecting your business.

Of course, LLMs can fill in the blanks in a very convincing way. But unless you’re drafting a post-apocalyptic fantasy novel, the output of an LLM needs a reality check.

🔀 What Is Retrieval Augmented Generation (RAG)?

Retrieval-Augmented Generation (RAG) is an AI technique that combines the generative capabilities of language models with a retrieval system that fetches external data.

Let’s say you’re drafting an analysis of the electric car market with the help of an AI-powered writing tool. It’s 2024, but the LLM that runs under the hood of the app was last updated in early 2021. The AI will give you an excellent overview, but the output will not include recent trends, like a major breakthrough in battery tech last month.

With RAG, you can point the LLM to relevant documentation or news articles to fill in the gaps. RAG will dynamically fetch and integrate the latest data directly into the AI’s output.

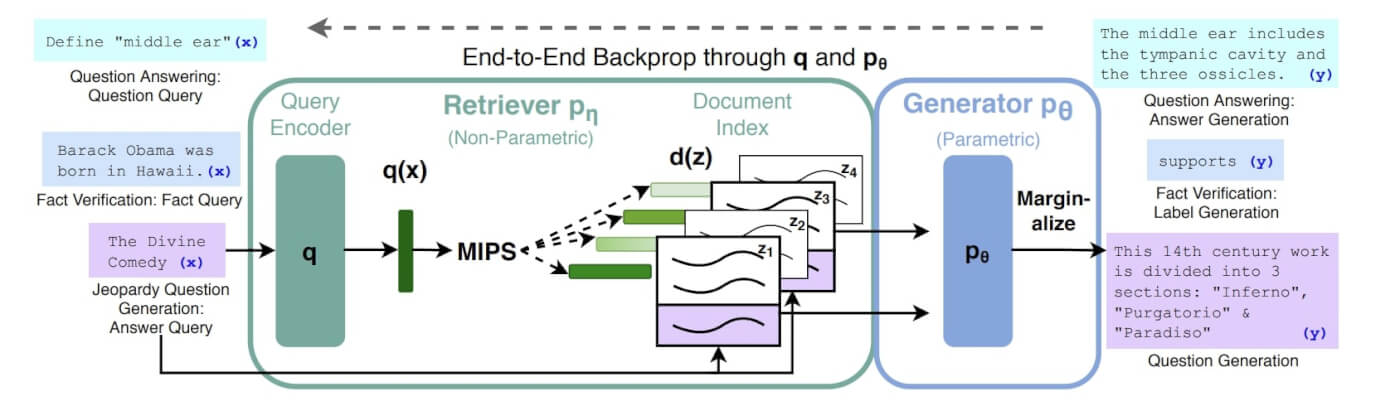
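In practice, “integrating” retrieved data usually means placing it in the prompt the model reads before it answers. Here is a minimal Python sketch of that step, assuming the relevant articles have already been fetched. The function name `build_augmented_prompt` and the prompt wording are hypothetical, not any particular product’s API.

```python
def build_augmented_prompt(question: str, passages: list[str]) -> str:
    """Place retrieved passages ahead of the user's question (hypothetical helper)."""
    # Number each passage so the answer can cite its sources, e.g. "[1]".
    context = "\n".join(f"[{i}] {text}" for i, text in enumerate(passages, start=1))
    return (
        "Answer the question using the context below. Cite passages by number.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Placeholder text stands in for articles fetched at query time.
prompt = build_augmented_prompt(
    "What are the latest trends in the electric car market?",
    ["<news article about a battery breakthrough>", "<recent EV sales report>"],
)
print(prompt)
```

The model itself is unchanged; only its input gets richer, which is why this works with an off-the-shelf LLM and no retraining.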

The history of RAG goes back to a 2020 paper titled “Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks” by researchers at Facebook AI Research (FAIR).

In a nutshell, Retrieval-Augmented Generation is a two-step method. First, the “retriever” sources information relevant to a task from a provided pool of data. Then, the “generator” (the LLM itself) takes this information and crafts a contextually relevant response.
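The two steps can be sketched in a few lines of Python. To stay self-contained, this toy retriever ranks passages by word-overlap cosine similarity, a simple stand-in for the learned dense retriever used in the 2020 paper. The generator is passed in as a plain function because the real LLM call depends on your provider. All names here are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Callable


def term_counts(text: str) -> Counter:
    """Lowercased bag-of-words counts for a piece of text."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Step 1, the retriever: return the k passages most similar to the query."""
    q = term_counts(query)
    return sorted(corpus, key=lambda doc: cosine(q, term_counts(doc)), reverse=True)[:k]


def rag_answer(query: str, corpus: list[str],
               llm: Callable[[str, list[str]], str], k: int = 2) -> str:
    """Step 2, the generator: hand the query plus retrieved passages to the LLM."""
    return llm(query, retrieve(query, corpus, k))


def echo_llm(query: str, passages: list[str]) -> str:
    """Stand-in "LLM" that just echoes the passages it was given."""
    return " | ".join(passages)


corpus = [
    "Solid-state batteries promise faster charging for electric cars.",
    "The settings menu is in the top-right corner of the app.",
    "Electric car sales grew in several markets.",
]
print(rag_answer("electric car batteries", corpus, echo_llm))
```

Swapping `echo_llm` for a real model call, or `term_counts` for an embedding model, changes the quality of the answers but not the shape of the pipeline.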

This approach has a number of serious advantages.

🤖 The Benefits of RAG in AI

Training a language model isn’t cheap.

The exact cost? It’s hard to pin down given how secretive AI companies are. But here’s the scoop from OpenAI’s most recent DevDay: the company is offering to train custom GPT-4 models on your own data, starting at $2-3M. That’s a lucrative business if we ever saw one.

Let’s make something clear. RAG does not replace the (costly) process of training a large language model. But it does open a world of interesting possibilities.

Imagine you’re a new user navigating an app for the first time.

There you are, eyes wide, finger poised over the mouse. “Where do I start?” “Where is the settings menu?” And as you accidentally click something that makes the screen flicker, a slight panic sets in. “Did I break something?”

An AI assistant integrated into the app and connected to its user documentation could give you a guided tour. It would fetch the right set of instructions, nudge you in the right direction, point out what you’re doing wrong, and suddenly, you’re in the know.

RAG makes all that possible with off-the-shelf AI models.

But access to domain-specific sources of knowledge is only one benefit.

RAG also reduces the risk of model hallucination, a common issue for all commercial and open-source LLMs. Because generation is grounded in retrieved source material, the model has less leeway for a “creative” approach and confabulation.
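RAG lowers the risk of hallucination rather than eliminating it: if retrieval comes back with nothing relevant, the model may still improvise. One common safeguard, sketched below in Python, is to drop weakly matching passages and abstain when none remain. The 0.3 cutoff, the function names, and the instruction wording are illustrative assumptions, and the scores are assumed to come from whatever retriever you use.

```python
def select_context(scored: list[tuple[float, str]], min_score: float = 0.3) -> list[str]:
    """Keep passages whose retrieval score clears min_score, best match first."""
    kept = sorted((pair for pair in scored if pair[0] >= min_score),
                  key=lambda pair: pair[0], reverse=True)
    return [text for _, text in kept]


def grounded_prompt_or_abstain(question: str, scored: list[tuple[float, str]]) -> str:
    """Return a grounded prompt, or a fallback message when nothing relevant was found."""
    context = select_context(scored)
    if not context:
        # Nothing cleared the cutoff: say so instead of letting the model guess.
        return "I couldn't find this in the provided sources."
    sources = "\n".join(context)
    return (
        "Answer ONLY from the sources below. If they don't contain the answer, say so.\n"
        f"Sources:\n{sources}\n"
        f"Question: {question}"
    )


scored = [(0.82, "Refunds are processed within 5 business days."),
          (0.12, "Our office dog is named Biscuit.")]
print(grounded_prompt_or_abstain("How long do refunds take?", scored))
print(grounded_prompt_or_abstain("Who won the match last night?",
                                 [(0.05, "Refunds are processed within 5 business days.")]))
```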

Finally, RAG allows for more dynamic and accurate answers.

Typically, when a language model doesn’t understand a query or provides an inadequate response, you must rephrase the prompt over and over again. That’s a lot of experimentation for a tool that’s supposed to save you time and effort.

With RAG, you get more precise results in fewer attempts than with the stock training data alone.

The question of the day is, where can RAG make the biggest impact?

⚒️ RAG in Practice: Applications and Use Cases

While the commercial success of LLMs is still fairly recent, there are hundreds of exciting ways you can use RAG in your personal and business projects.

Smarter Project Management

Starting a new project is an adventure.

You’re gearing up, doing the research, and assembling the right team for the job. You want it to be a hit, so you take cues from what’s worked before. Maybe you’re sifting through old tasks, trying to figure out who should do what and when.

Why not let AI do the heavy lifting?

An AI-powered project management app with RAG will dive into a sea of historical project data, pull out golden nuggets of past strategies, set realistic deadlines, allocate resources, assign team roles, and structure your project.


Faster Content Creation

Large language models are excellent at generating content. With a few solid prompts, they can churn out comprehensive articles, reports, and other textual resources.

But there’s a catch: it’s all pretty generic at first.

There’s a slim chance that the first output will sound like you or fit the unique vibe of your brand. It takes time to fine-tune the tone, cadence, and nuances.

You can speed up this process with RAG.

Need to write another social media post?

You can simply cherry-pick your past successful posts, feed them to the LLM, and set it to generate fresh content using the style your audience loves. No more endless adjustments — just sharp, engaging copy that sounds just like you, only quicker.
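Here the “retrieval” is simply picking your best-performing posts and placing them in the prompt as examples of your voice. A minimal Python sketch, assuming each post comes with some engagement number; the `Post` class, its fields, and the sample posts are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Post:
    text: str
    engagement: int  # hypothetical metric, e.g. likes plus shares


def style_prompt(topic: str, past_posts: list[Post], n_examples: int = 2) -> str:
    """Build a few-shot prompt from the n best-performing past posts."""
    best = sorted(past_posts, key=lambda p: p.engagement, reverse=True)[:n_examples]
    examples = "\n\n".join(f"Example post:\n{p.text}" for p in best)
    return (
        "Write a new social media post in the same voice as these examples.\n\n"
        f"{examples}\n\n"
        f"Topic: {topic}\n"
        "New post:"
    )


# Made-up sample posts; in practice these would come from your own account.
posts = [
    Post("Big news: dark mode is here. Your eyes can thank us later.", 540),
    Post("Quarterly update: we fixed 42 bugs.", 35),
    Post("Tiny feature, huge vibe: drag-and-drop checklists.", 410),
]
print(style_prompt("new calendar integration", posts))
```

Ranking by engagement is just one way to choose examples; you could equally retrieve the past posts most similar to the new topic.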


Personalized Learning

Now, let’s consider a different scenario.

You’re a diligent student preparing for an exam. You have a ton of solid but unorganized notes. But you need to learn fast and can’t afford to get lost in the paper shuffle.

You can spend a couple of nights slouched over coffee-stained pages trying to connect the dots. Or you can set up a smart AI study buddy to help you out. All you need to do is upload your notes, provide links to other credible resources, and ask away.
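Before an assistant can search your notes, they are typically split into small, overlapping chunks so that each retrieved piece is focused but still carries a bit of surrounding context. A minimal Python sketch that chunks by word count; the 50-word window and 10-word overlap are arbitrary assumptions, and many real systems count model tokens instead of words.

```python
def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words; consecutive windows share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


# Placeholder notes: one sentence repeated to make a 120-word document.
notes = "Mitochondria produce ATP through cellular respiration. " * 20
chunks = chunk_words(notes, size=50, overlap=10)
print(len(chunks), chunks[0][:60])
```

Each chunk would then be indexed by the retriever, so a question about one topic pulls back the relevant few paragraphs rather than the whole notebook.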

🔮 The Future of Retrieval Augmented Generation

Tools based on Large Language Models (LLMs) like GPT-4 or Llama 2 have already reshaped our work landscape. RAG pushes the boundaries even further by giving AI access to the latest, most relevant data for any task, project, or decision.

Before you go, let’s recap what we learned today:

- ⭕ More accurate responses: With RAG, off-the-shelf LLMs can deliver context-relevant and accurate answers without the need for retraining.

- ⭕ Real-time updates: RAG opens AI-powered tools to fresh data, which means that they can generate content based on the most current information.

- ⭕ Reduced hallucinations: By grounding responses in up-to-date, accurate data, RAG reduces the chances of AI producing irrelevant or incorrect information.

- ⭕ Specialized applications: Using external resources from various fields, from healthcare to finance, you can tailor AI’s responses to industry standards.

- ⭕ Affordability: RAG is an excellent solution for companies that can’t or don’t want to spend big bucks on retraining large language models.

Tired of constantly reprompting AI and bottlenecking your projects? We have a solution.

Taskade AI is the most complete suite of productivity tools, now powered by GPT-4 Turbo with RAG support — one platform for all your projects, tasks, notes, and collaboration.

🤖 Custom AI Agents: Create and deploy teams of AI agents, each with a unique personality and skills. Upload your documents and spreadsheets, or use external resources to amplify agents’ knowledge. Set agents to automate your tasks in the background, or call them when needed with custom AI commands.

🪄 AI Generator: Need to outline a document? Maybe you want to kickstart a project but don’t want to spend hours setting things up. Let Taskade AI do the heavy lifting and structure your work in seconds. Describe your work in natural language or upload seed documents and resources for more tailored results.

✏️ AI Assistant: Brainstorm, plan, outline, and write faster with smart suggestions powered by GPT-4 Turbo, right inside the project editor. Choose from a set of built-in /AI commands or tap into your custom agents for extra help.

📄 Media Q&A: Add your documents and spreadsheets to projects, and use Taskade AI to extract valuable insights. Start a Q&A session with AI and ask questions about document contents using a conversational interface.

And much more…

💬 People Also Ask

What is Retrieval Augmented Generation?

Retrieval Augmented Generation, or RAG, is an AI technique that enhances the capabilities of Large Language Models (LLMs) by integrating external data at query time. With RAG, AI responses are not based only on pre-learned data; they can also draw on the latest information, making them more accurate and contextually relevant.

Who created the Retrieval Augmented Generation?

Retrieval Augmented Generation was conceptualized and developed by a team of researchers at Facebook AI Research (FAIR). The innovative approach was detailed in their 2020 paper titled “Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks,” laying the groundwork for future advancements in AI and LLM technologies.

How does the RAG model work?

The RAG model works by combining the generative capabilities of LLMs with a retrieval mechanism. When a query comes in, RAG first retrieves relevant external data and then passes it to the model, which uses it to generate a more accurate and contextually relevant response. Because the knowledge base lives outside the model, it can be updated with new sources at any time without retraining the LLM.

Is Retrieval Augmented Generation better than LLM?

Retrieval Augmented Generation is not necessarily better than traditional Large Language Models; rather, it’s an enhancement. While LLMs are powerful on their own, they are limited by the data they were trained on. RAG addresses this limitation by giving the model access to up-to-date information at query time, making it a more dynamic, accurate, and reliable tool.

What are the benefits of Retrieval Augmented Generation?

The benefits of Retrieval Augmented Generation are manifold. It significantly increases the accuracy and relevance of AI responses by integrating the latest data. RAG reduces the frequency of AI hallucinations, improves the efficiency of information retrieval, and broadens the application of AI across various industries by providing more tailored and up-to-date solutions.

What are the limitations of Retrieval Augmented Generation?

Despite its numerous advantages, RAG has some limitations. It relies heavily on the availability and quality of external data sources, which, if flawed or biased, can affect the accuracy of responses. Integrating RAG into existing systems can be complex, and continuously retrieving and processing large volumes of data requires robust computational power and can be resource-intensive.
